Azure Cosmos DB

Azure Cosmos DB is a fully managed NoSQL and relational database for modern app development, offering single-digit millisecond response times, automatic and instant scalability, and guaranteed speed at any scale. This integration helps you monitor the overall performance, failures, capacity, and operational health of all your Azure Cosmos DB resources.

The following instructions apply to these database APIs:

- NoSQL

- MongoDB

- Cassandra

- Gremlin

- Table

This app is tested with Azure Cosmos DB for MongoDB, Cassandra, and NoSQL database account types.

Log and metric types

For Azure Cosmos DB, you can collect the following logs and metrics:

- Resource logs. To learn more about the different resource log category types and schemas collected for Azure Cosmos DB, refer to the Azure documentation.

- Platform Metrics for Azure Cosmos DB. For more information on supported metrics and dimensions, refer to the Azure documentation. These metrics are available in the following namespaces:

  - Microsoft.DocumentDB/applicationGateways
  - Microsoft.DocumentDB/cassandraClusters
  - Microsoft.DocumentDB/mongoClusters

- Activity logs. Provides insight into any subscription-level or management-group-level events that have occurred in Azure. To learn more, refer to the Azure documentation.

Setup

Azure services send monitoring data to Azure Monitor, which can then stream the data to an Event Hub. Sumo Logic supports:

- Logs collection from Azure Monitor using our Azure Event Hubs source.

- Metrics collection using our Azure Metrics Source.

You must explicitly enable diagnostic settings for each Azure Cosmos DB account you want to monitor. You can forward logs to the same Event Hub provided they satisfy the limitations and permissions as described here.

When you configure the Event Hubs source or HTTP source, plan your source category to ease the querying process. A hierarchical approach allows you to make use of wildcards. For example: Azure/CosmosDB/Logs, Azure/CosmosDB/Metrics.
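A hierarchical source category lets one wildcard pattern cover related sources. Python's fnmatch is used below only to illustrate the idea. It approximates, but is not, Sumo Logic's matching on _sourceCategory. The category list is hypothetical, apart from the two examples above.

```python
from fnmatch import fnmatchcase

# Hypothetical source categories following the hierarchical scheme above.
SOURCE_CATEGORIES = [
    "Azure/CosmosDB/Logs",
    "Azure/CosmosDB/Metrics",
    "Azure/Storage/Logs",
]


def matching_categories(pattern, categories):
    """Return the categories matched by a wildcard pattern.

    In fnmatchcase, '*' matches any run of characters, including '/'.
    This only approximates Sumo Logic's wildcard matching; it is not
    Sumo Logic's implementation.
    """
    return [c for c in categories if fnmatchcase(c, pattern)]


print(matching_categories("Azure/CosmosDB/*", SOURCE_CATEGORIES))
# ['Azure/CosmosDB/Logs', 'Azure/CosmosDB/Metrics']
print(matching_categories("Azure/*/Logs", SOURCE_CATEGORIES))
# ['Azure/CosmosDB/Logs', 'Azure/Storage/Logs']
```

With this layout, one pattern such as Azure/CosmosDB/* selects both the Cosmos DB logs and the metrics sources.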

Configure collector

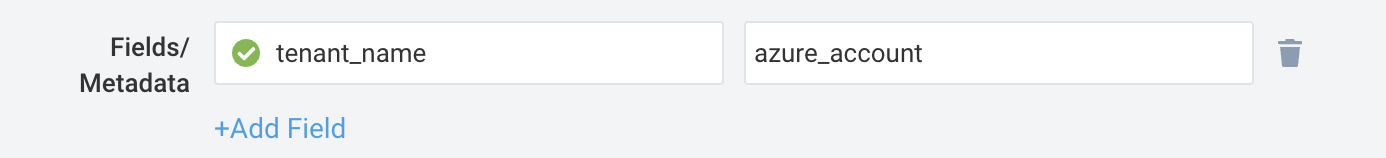

Create a hosted collector if one is not already configured, and tag it with the tenant_name field. You can get the tenant name using the instructions here. Make sure you create the required sources in this collector.

Configure metrics collection

To set up the Azure Metrics source in Sumo Logic, refer to Azure Metrics Source.

Configure logs collection

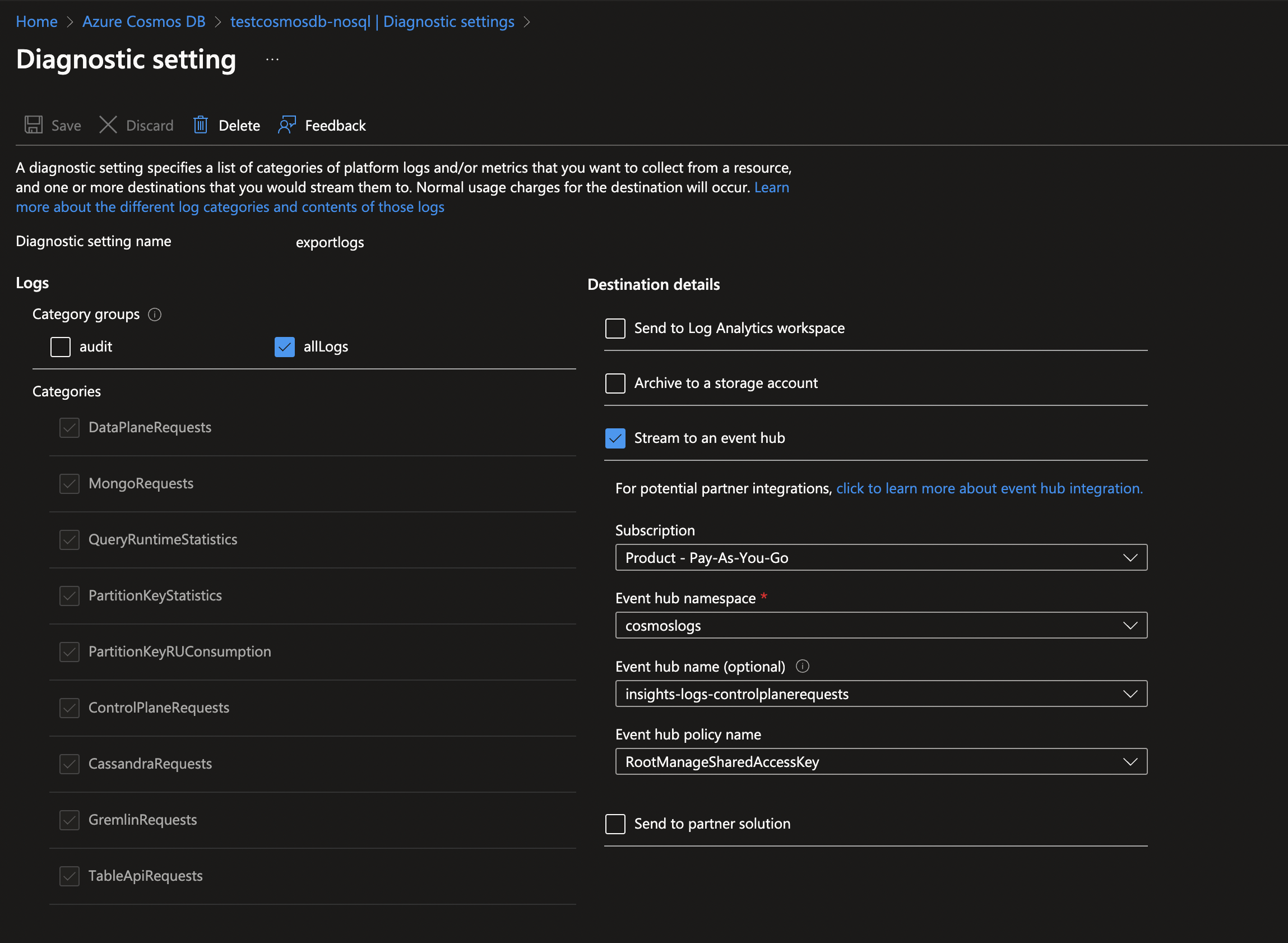

In this section, you will configure a pipeline for shipping diagnostic logs from Azure Monitor to an Event Hub.

- To set up the Azure Event Hubs source in Sumo Logic, refer to Azure Event Hubs Source for Logs.

- If you want to audit Azure Cosmos DB control plane operations, disable key-based metadata write access.

- To create the diagnostic settings in the Azure portal, refer to the Azure documentation. Perform the following steps for each Azure Cosmos DB account that you want to monitor.

- Choose Stream to an event hub as the destination.

- Select your preferred log categories depending on your database API, or select allLogs.

- In the destination details section, use the Event Hub namespace and Event Hub name configured in the previous step. You can use the default policy RootManageSharedAccessKey as the policy name.

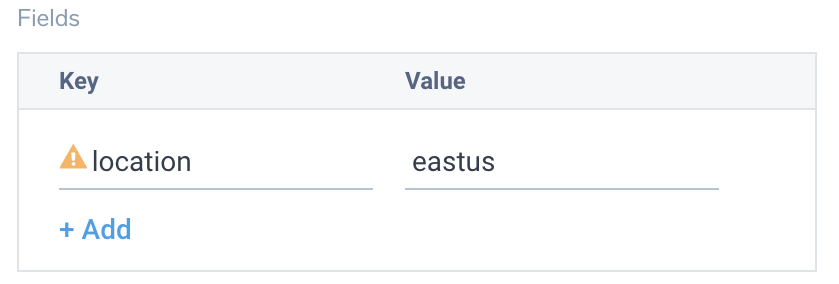

- Tag the location field in the source with the correct location value.
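The setup above refers to an Event Hub namespace, an Event Hub name and a policy name. You may copy an Event Hubs connection string from the Azure portal instead. It has the form Endpoint=sb://<namespace>.servicebus.windows.net/;SharedAccessKeyName=<policy>;SharedAccessKey=<key>. Hub-scoped policies also carry ;EntityPath=<event hub>.

The sketch below splits such a string into those values. The function name and sample values are hypothetical placeholders. The shared access key is deliberately left out of the result.

```python
from urllib.parse import urlparse


def parse_event_hub_connection_string(conn_str):
    """Extract namespace, policy name, and Event Hub name from a connection string.

    Expected shape (placeholders):
    Endpoint=sb://<namespace>.servicebus.windows.net/;SharedAccessKeyName=<policy>;
    SharedAccessKey=<key>[;EntityPath=<event hub>]
    """
    parts = {}
    for segment in conn_str.strip().split(";"):
        if not segment:
            continue  # tolerate a trailing ';'
        # partition() splits at the first '=' only, so base64 '=' padding
        # inside SharedAccessKey stays part of the value.
        key, sep, value = segment.partition("=")
        if not sep:
            raise ValueError(f"malformed segment: {segment!r}")
        parts[key] = value
    if "Endpoint" not in parts:
        raise ValueError("connection string has no Endpoint")
    host = urlparse(parts["Endpoint"]).hostname or ""
    return {
        "namespace": host.split(".", 1)[0],
        "policy_name": parts.get("SharedAccessKeyName"),
        "event_hub": parts.get("EntityPath"),  # None for namespace-level policies
    }


example = (
    "Endpoint=sb://my-namespace.servicebus.windows.net/;"
    "SharedAccessKeyName=RootManageSharedAccessKey;SharedAccessKey=PLACEHOLDER="
)
print(parse_event_hub_connection_string(example))
# {'namespace': 'my-namespace', 'policy_name': 'RootManageSharedAccessKey', 'event_hub': None}
```

A namespace-level policy such as RootManageSharedAccessKey has no EntityPath. In that case, you still supply the Event Hub name separately.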

Activity logs (optional)

To collect activity logs, follow the instructions here. If you are already collecting activity logs for a subscription, you can skip this step.

Since this source includes logs from multiple regions, do not tag it with the location field.

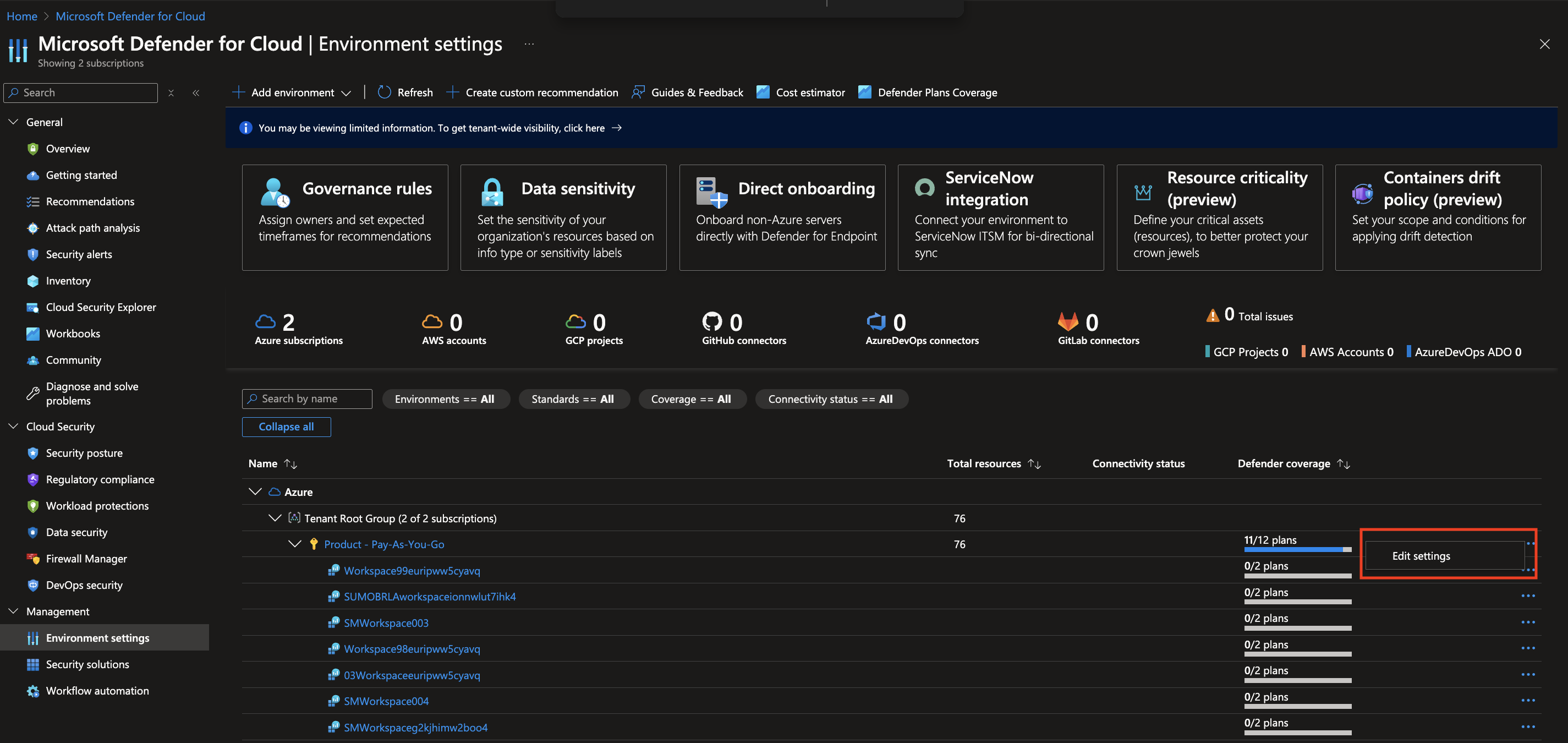

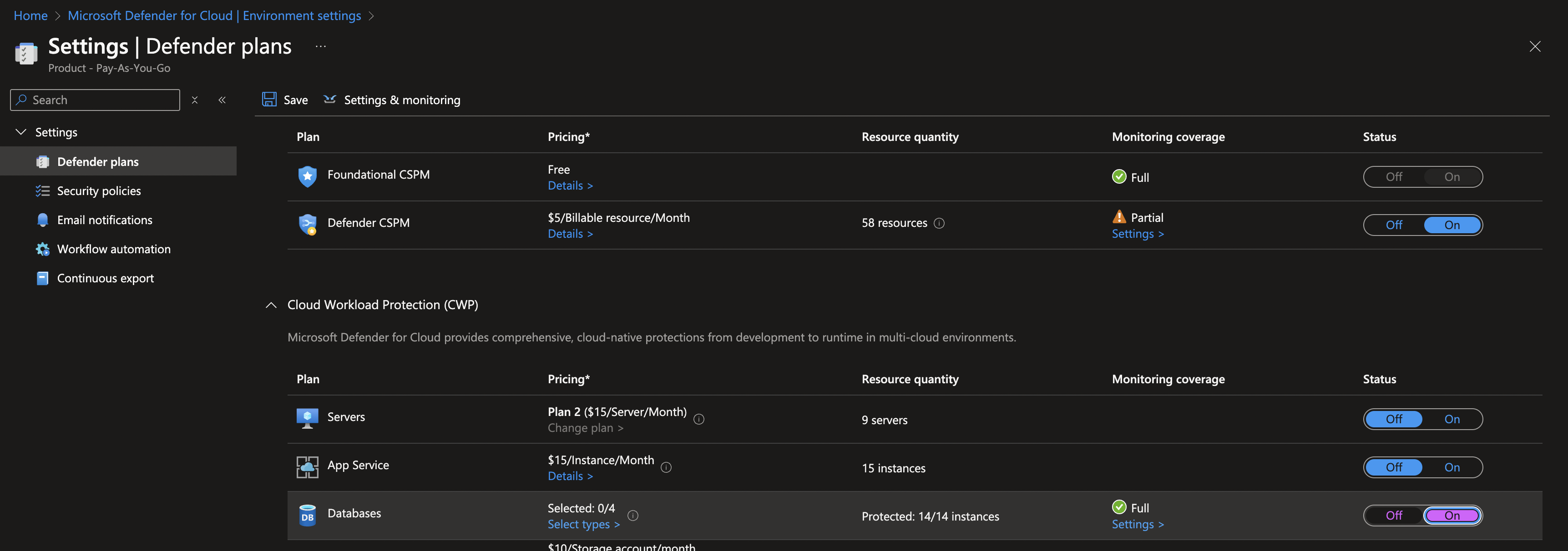

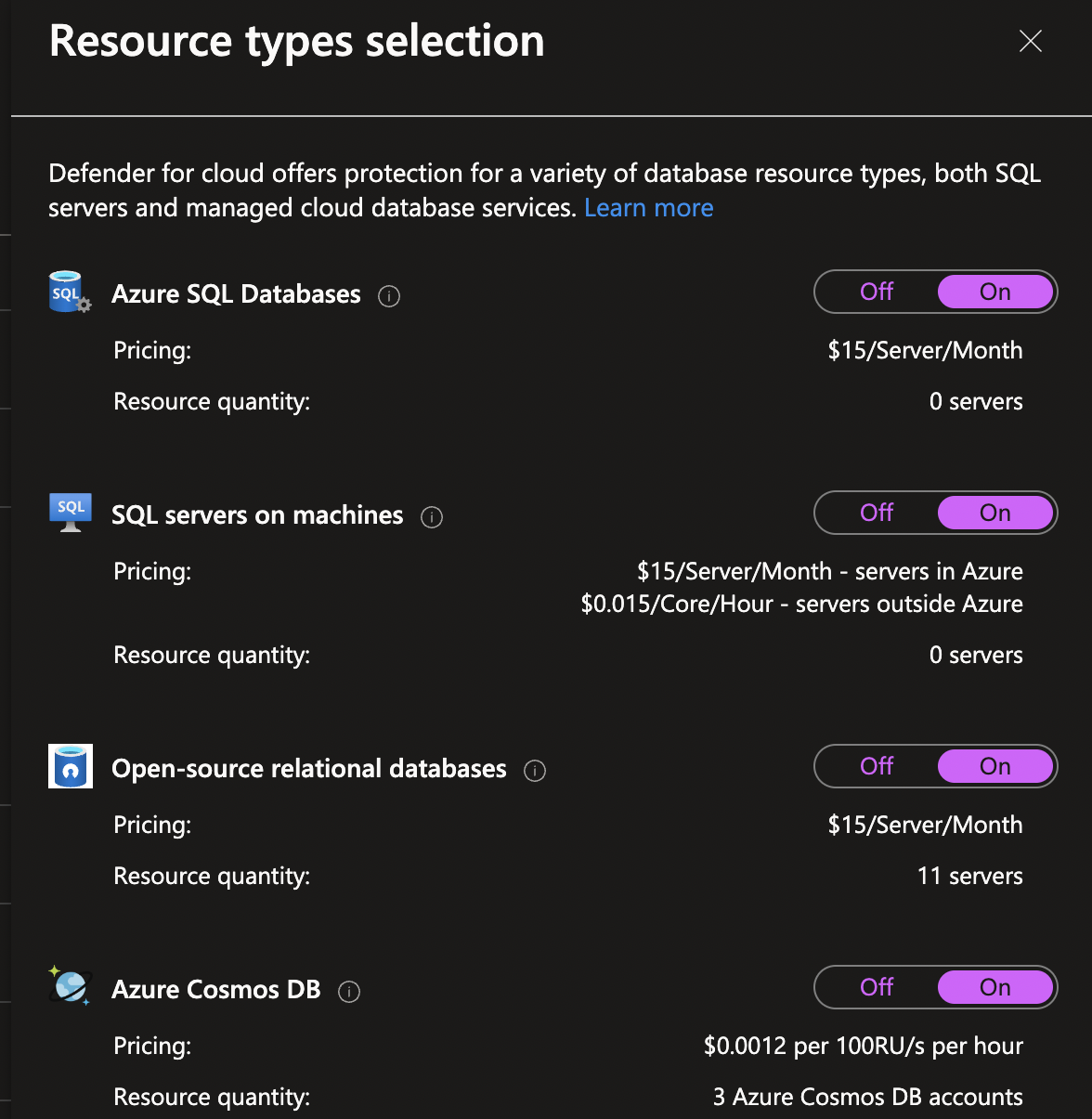

Enabling Microsoft Defender for Cloud

For security events, make sure you enable Microsoft Defender for Cloud. On the Defender Plans Settings page, toggle the Databases status under the Cloud Workload Protection section and select the resource types.

Installing the Azure Cosmos DB app

This section provides instructions on how to install the Azure Cosmos DB app, and shows examples of each of the preconfigured dashboards you can use to analyze your data.

To install the app, do the following:

Next-Gen App: To install or update the app, you must be an account administrator or a user with the Manage Apps, Manage Monitors, Manage Fields, Manage Metric Rules, and Manage Collectors capabilities, depending on the content types included in the app.

- Select App Catalog.

- In the 🔎 Search Apps field, run a search for your desired app, then select it.

- Click Install App.

note

Sometimes this button says Add Integration.

- Click Next in the Setup Data section.

- In the Configure App section of your respective app, complete the following field.

- Index. Specify a value for _index if the collection is configured with a custom partition. Learn more. The default value is sumologic_default (default partition).


- Click Next. You will be redirected to the Preview & Done section.

Post-installation

Once your app is installed, it will appear in your Installed Apps folder, and dashboard panels will start to fill automatically.

Each panel slowly fills with data matching the time range query received since the panel was created. Results will not immediately be available but will be updated with full graphs and charts over time.

As part of the app installation process, the following fields will be created by default:

- tenant_name. This field is tagged at the collector level. You can get the tenant name using the instructions here.
- location. The region the resource belongs to.
- subscription_id. The ID of the subscription that contains the resource.
- resource_group. The name of the resource group that contains the Azure resource.
- provider_name. Azure resource provider name (for example, Microsoft.Network).
- resource_type. Azure resource type (for example, storage accounts).
- resource_name. The name of the resource (for example, storage account name).
- service_type. Type of the service that can be accessed with an Azure resource.
- service_name. Services that can be accessed with an Azure resource (for example, in Azure Container Instances the service is Subscriptions).
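Several of these fields correspond to segments of a standard Azure resource ID. The ID has the form /subscriptions/<subscription>/resourceGroups/<group>/providers/<provider>/<type>/<name>.

The sketch below shows that mapping for a top-level resource such as a Cosmos DB account. It illustrates the ID structure only. It is not a description of how the app or Sumo Logic actually populates these fields. It also does not derive tenant_name, location, service_type or service_name. The function name and sample ID are hypothetical.

```python
def parse_resource_id(resource_id):
    """Map a top-level Azure resource ID onto the field names listed above.

    Segment names are matched case-insensitively because Azure Monitor
    logs often report resource IDs in upper case. Only the first
    type/name pair after 'providers' is read, so nested (child) resources
    are reported as their top-level parent.
    """
    segments = resource_id.strip("/").split("/")
    lowered = [s.lower() for s in segments]
    try:
        subscription = segments[lowered.index("subscriptions") + 1]
        group = segments[lowered.index("resourcegroups") + 1]
        p = lowered.index("providers")
        provider, rtype, name = segments[p + 1], segments[p + 2], segments[p + 3]
    except (ValueError, IndexError) as exc:
        raise ValueError(f"not a resource ID: {resource_id!r}") from exc
    return {
        "subscription_id": subscription,
        "resource_group": group,
        "provider_name": provider,
        "resource_type": rtype,
        "resource_name": name,
    }


sample = (
    "/subscriptions/00000000-0000-0000-0000-000000000000/resourceGroups/my-rg"
    "/providers/Microsoft.DocumentDB/databaseAccounts/my-account"
)
print(parse_resource_id(sample))
```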

Viewing the Azure Cosmos DB dashboards

All dashboards have a set of filters that you can apply to the entire dashboard. Use these filters to drill down and examine the data to a granular level.

- You can change the time range for a dashboard or panel by selecting a predefined interval from a drop-down list, choosing a recently used time range, or specifying custom dates and times. Learn more.

- You can use template variables to drill down and examine the data on a granular level. For more information, see Filtering Dashboards with Template Variables.

- Many Next-Gen apps let you provide the Index at installation time, with a default value of sumologic_default. Based on your input, the app dashboards are parameterized with a dashboard variable that lets you change the data partition queried by all panels. This restricts the scope of all dashboard queries to a specific data partition.

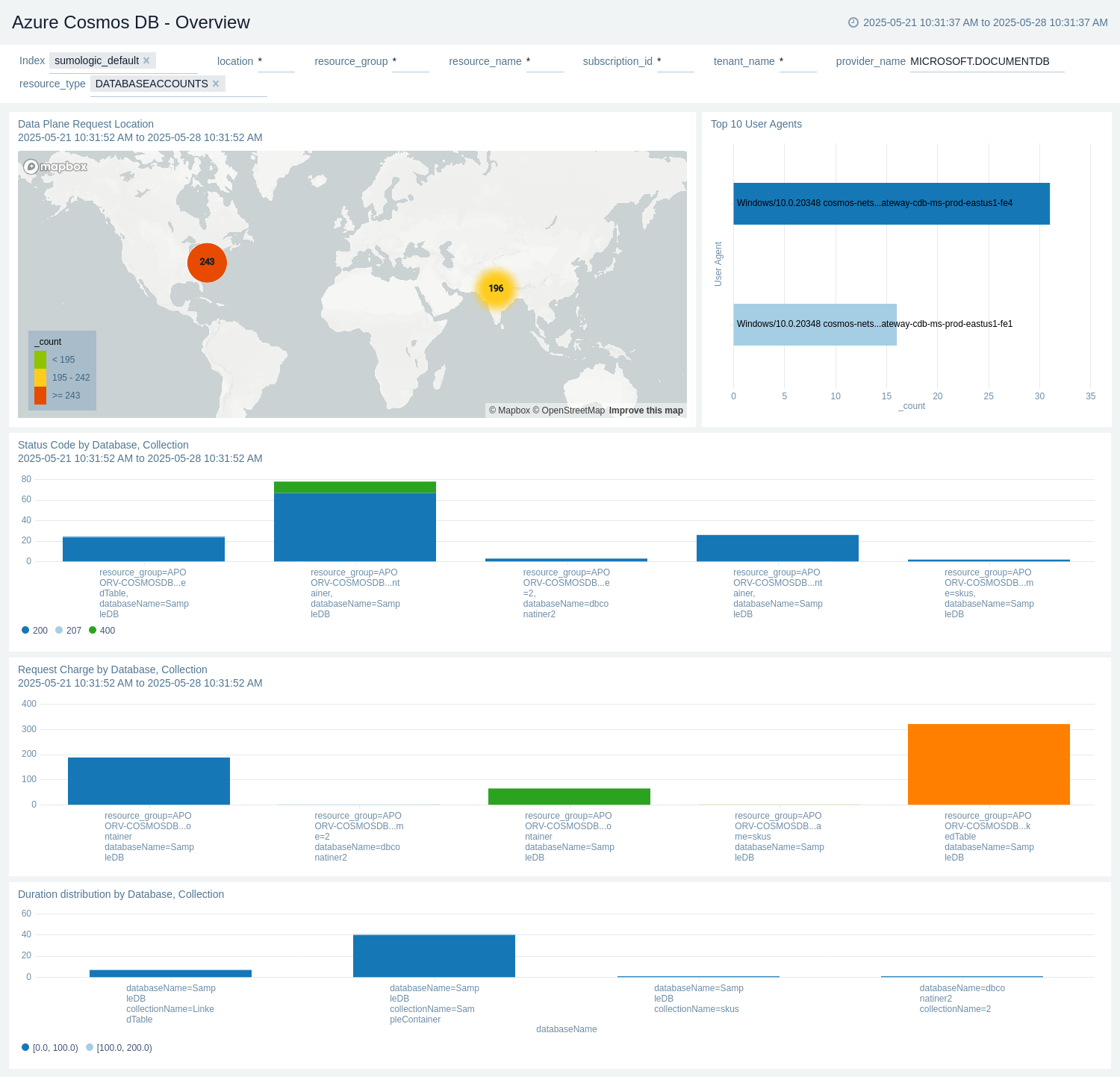

Overview

The Azure Cosmos DB - Overview dashboard provides details about RU (Request Unit) consumption, request duration, and status code distribution across databases and collections.

Use this dashboard to:

- Track data plane request locations for cross-region calls.

- Monitor request units consumed and request duration across databases and collections.

- Identify failed requests across databases and collections.

Throughput

The Azure Cosmos DB - Throughput dashboard provides details about Request Unit (RU) consumption for logical partition keys in each region, within each of their physical partitions.

Use this dashboard to:

- Identify hot partitions from a request volume perspective.

- Track request units consumed by each database.
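A hot partition means that a few logical partition keys take a disproportionate share of request units. One simple way to reason about per-key RU figures like those on this dashboard is to compute each key's share of the total. The sketch below flags keys above a cutoff.

The function name, the input shape (partition key mapped to RU consumed over some window), the sample keys and the 0.5 default cutoff are all hypothetical. This is not the dashboard's own logic.

```python
def hot_partition_keys(ru_by_key, share_threshold=0.5):
    """Return (key, share) pairs whose share of total RU exceeds share_threshold.

    ru_by_key maps a logical partition key to the request units it consumed
    over some time window. The 0.5 default cutoff is arbitrary.
    Results are sorted from the largest share to the smallest.
    """
    total = sum(ru_by_key.values())
    if total <= 0:
        return []  # no consumption recorded, nothing can be hot
    hot = [(key, ru / total) for key, ru in ru_by_key.items() if ru / total > share_threshold]
    return sorted(hot, key=lambda pair: pair[1], reverse=True)


usage = {"tenant-a": 8000.0, "tenant-b": 1200.0, "tenant-c": 800.0}
print(hot_partition_keys(usage))       # tenant-a holds 80% of the RU
print(hot_partition_keys(usage, 0.1))  # tenant-a and tenant-b exceed 10%
```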

Audit

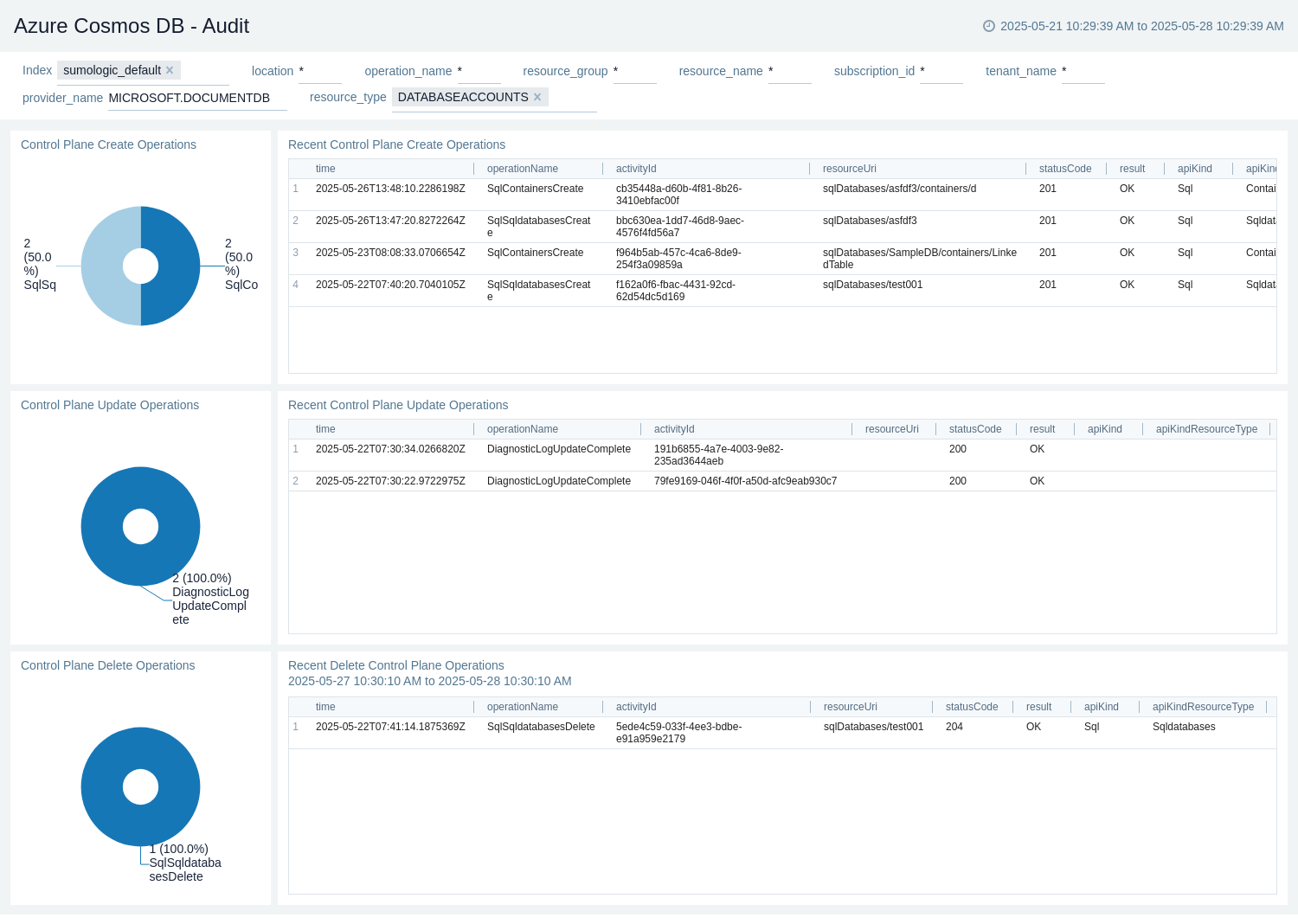

The Azure Cosmos DB - Audit dashboard provides details about all control plane operations executed on the account.

Use this dashboard to:

- Monitor control plane requests, which include modifications to the regional failover policy, indexing policy, IAM role assignments, backup/restore policies, VNet and firewall rules, and private links, as well as account updates and deletions.

- Monitor data plane operations executed to create, update, delete, or retrieve data within the account.

- Use the operation name filter to track important events such as network settings updates, account key rotations, provisioned throughput changes, and replication settings updates.

Storage

The Azure Cosmos DB - Storage dashboard provides details about data usage, document count, and physical partition size by database.

Use this dashboard to:

- Identify logical partition keys that have consumed more storage space than others.

- Track document count and data usage.

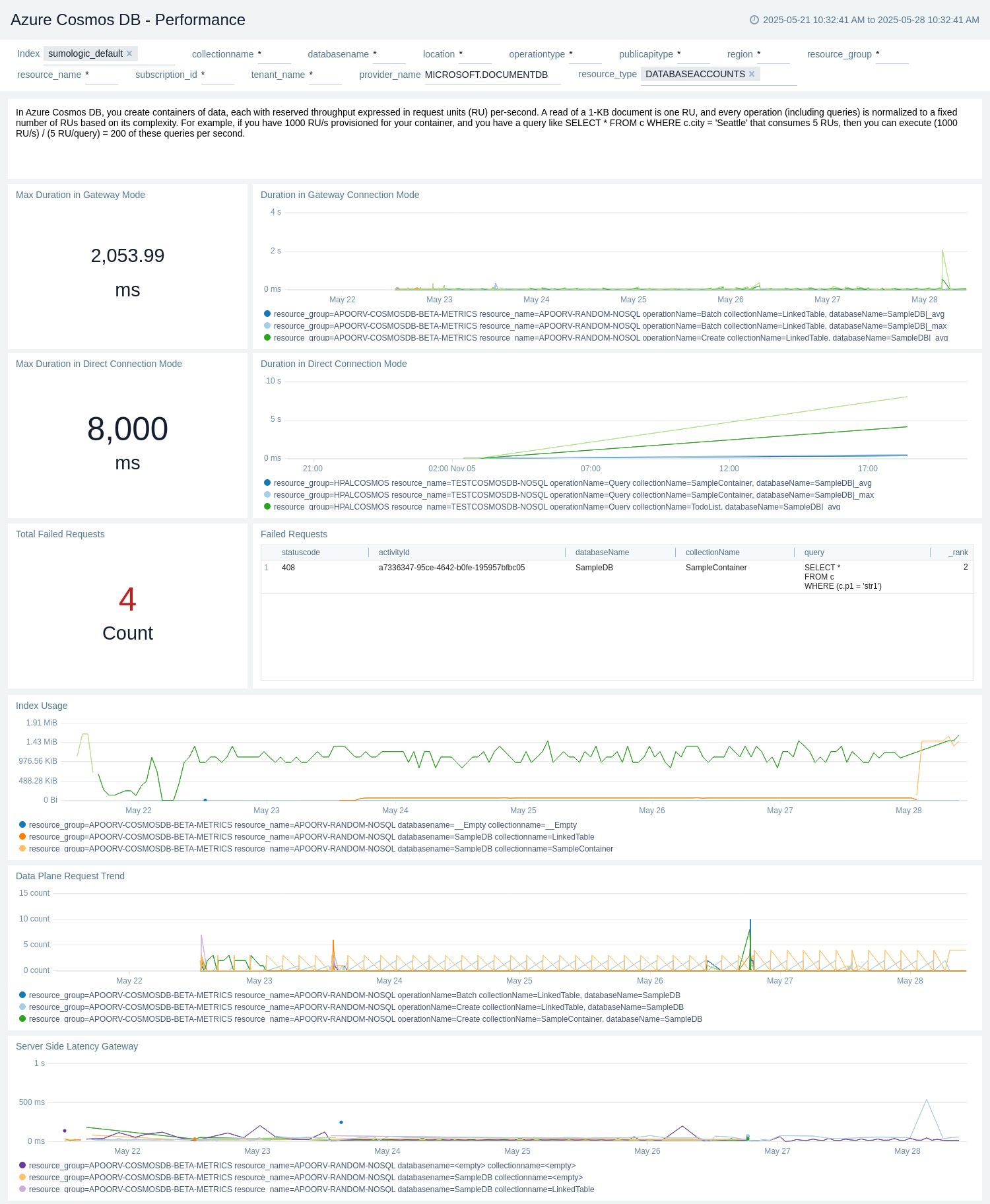

Performance

The Azure Cosmos DB - Performance dashboard provides insights into the performance of your Azure Cosmos DB databases, including query duration, server-side latency, and failed queries.

Use this dashboard to:

- Monitor and analyze the failed queries of your Azure Cosmos DB.

- Identify performance bottlenecks and optimize query execution.

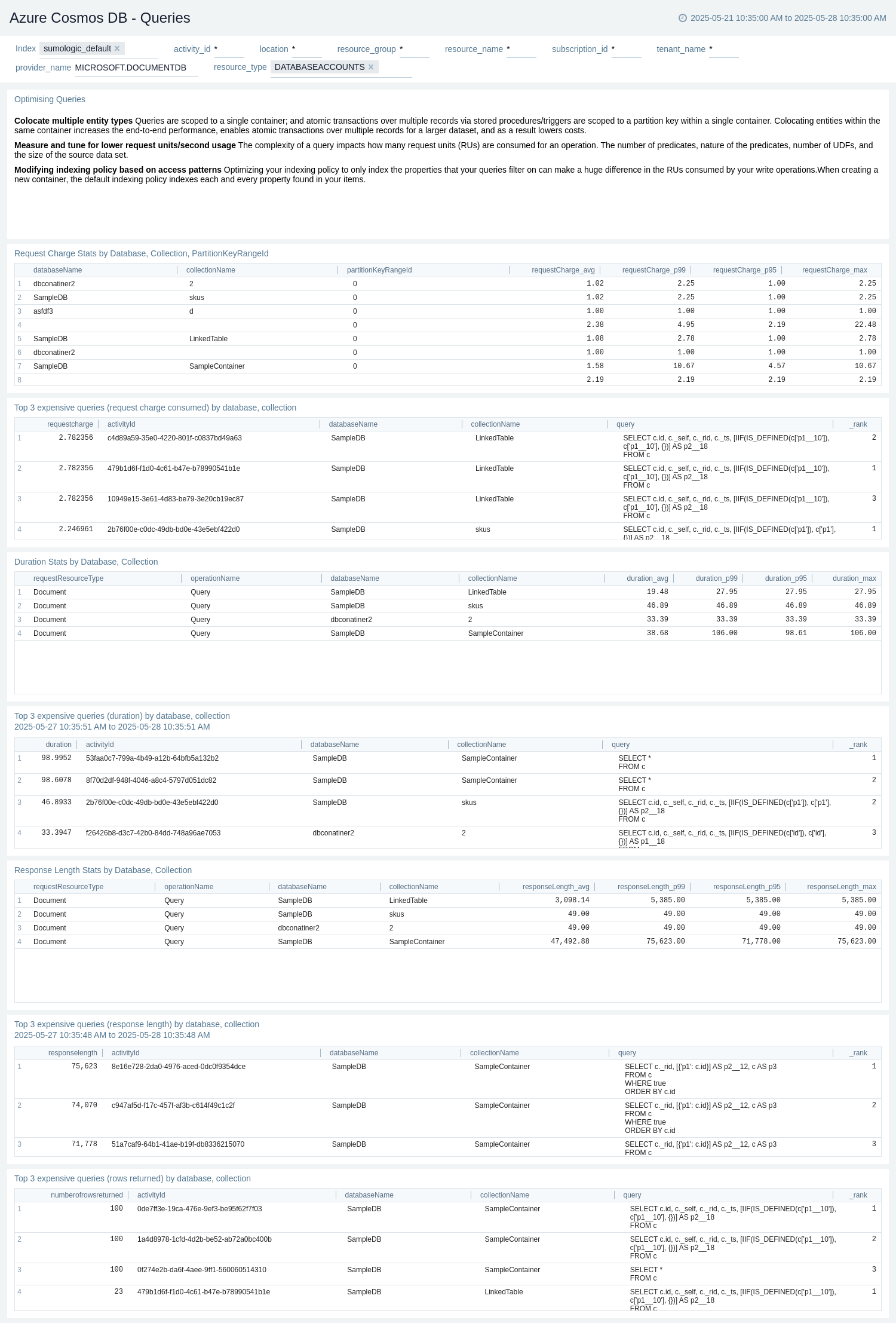

Queries

The Azure Cosmos DB - Queries dashboard provides insights into the queries executed in your Azure Cosmos DB databases.

Use this dashboard to:

- Analyze query distribution by duration, request charge, and response length.

- Identify query bottlenecks and optimize query execution.

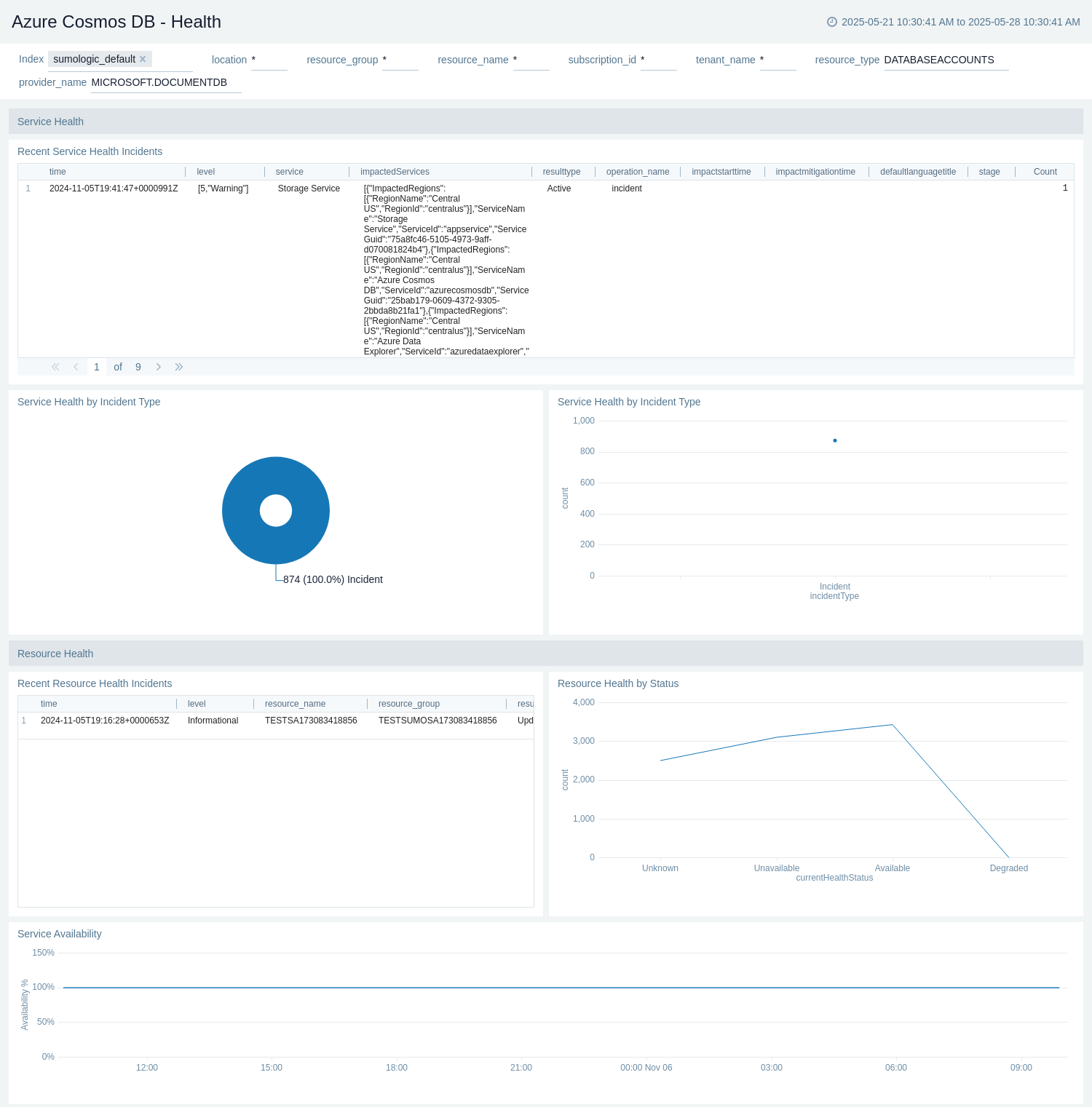

Health

The Azure Cosmos DB - Health dashboard provides information about any service health incidents or resource health events associated with the Azure Cosmos DB accounts in your Azure account.

Use this dashboard to:

- View recent resource and service health incidents.

- View distribution of service and resource health by incident type.

- Monitor service availability.

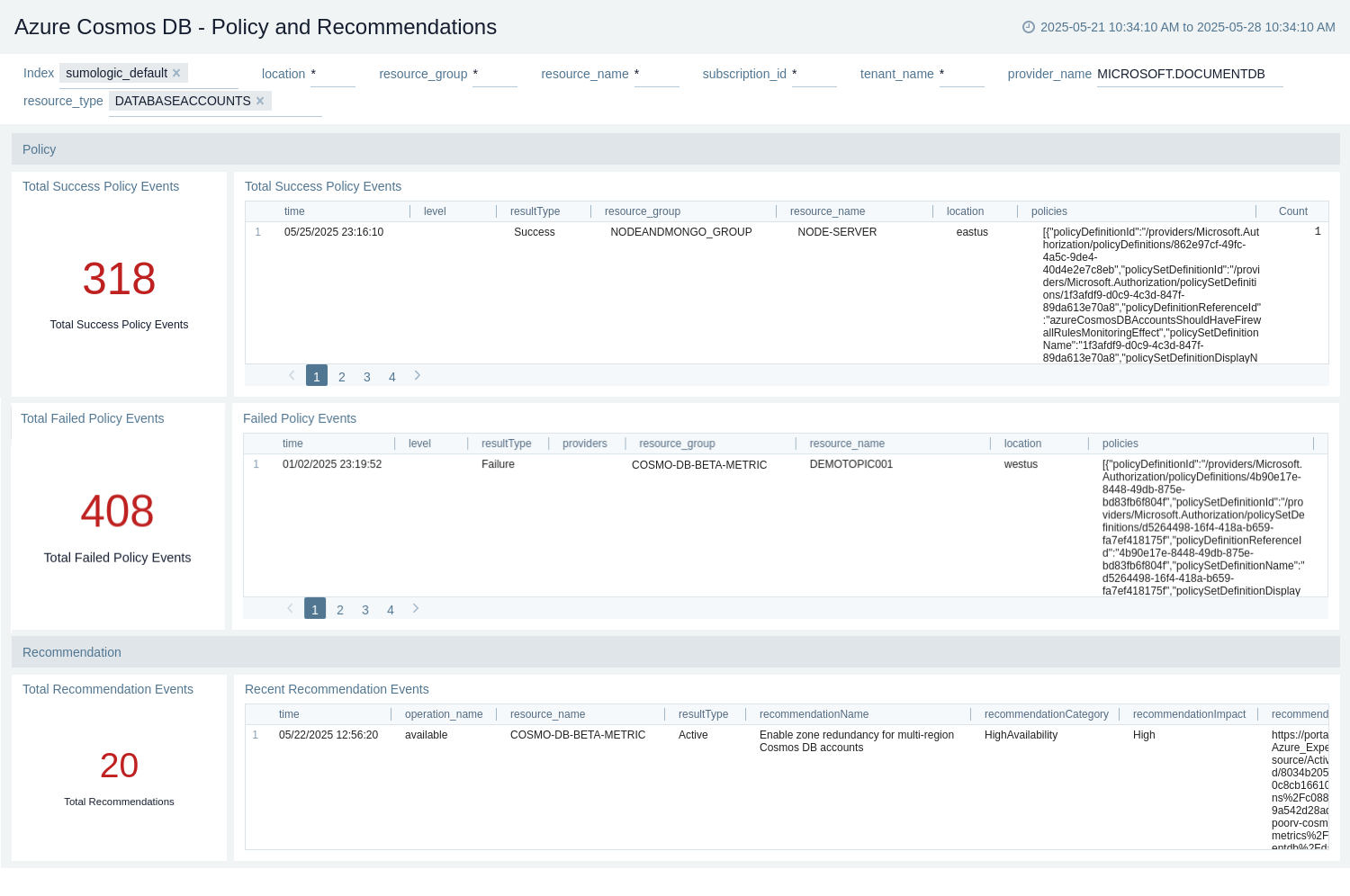

Policy and Recommendations

The Azure Cosmos DB - Policy and Recommendations dashboard provides information about all effect action operations performed by Azure Policy and about recommendation events from Azure Advisor.

Use this dashboard to:

- Monitor policy events with warnings and errors.

- View recent failed policy events.

- View total recommendation events.

- Identify high impact recommendations.

- View recent recommendation events and navigate to the affected resource.

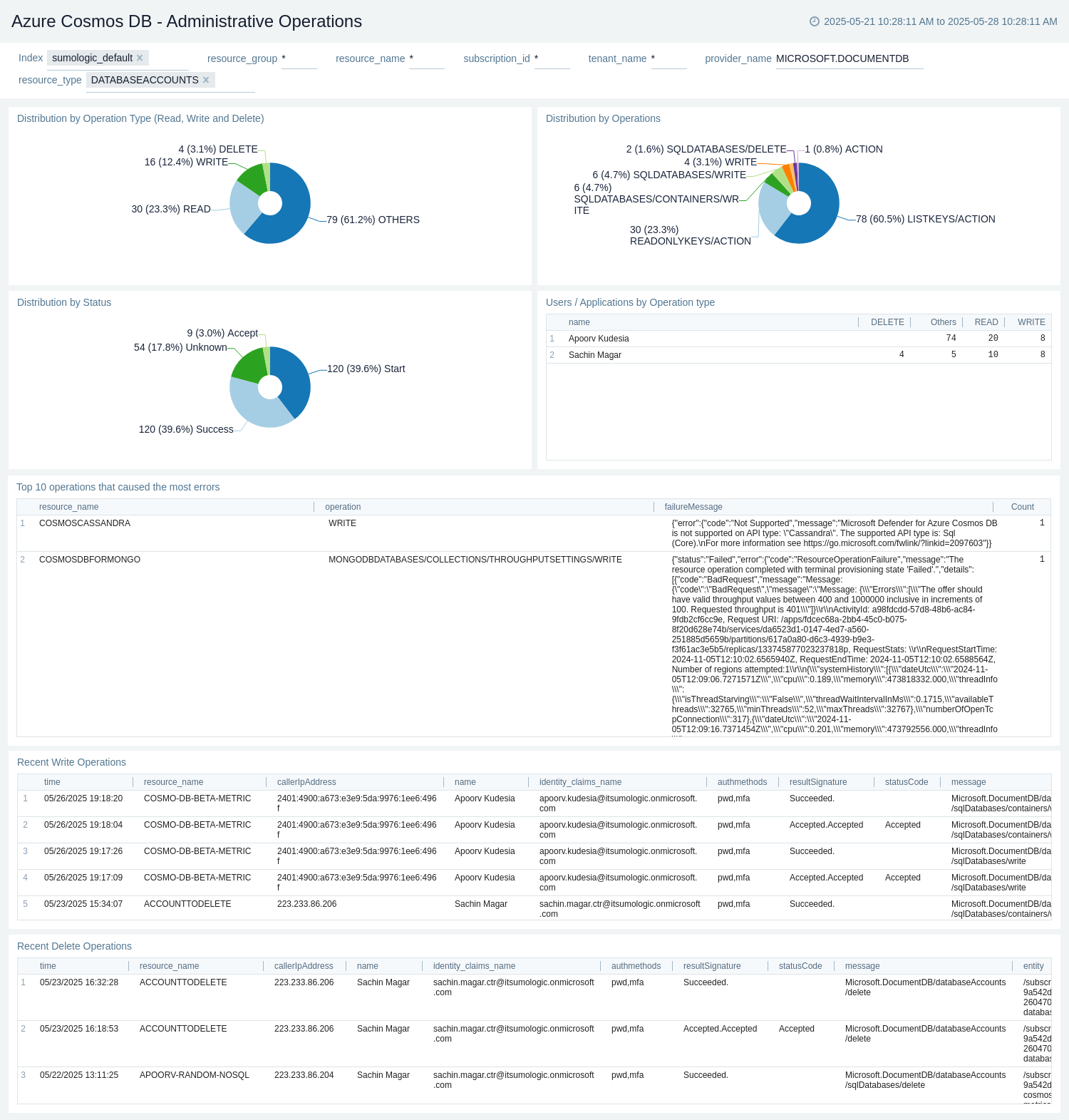

Administrative Operations

The Azure Cosmos DB - Administrative Operations dashboard provides details on users performing admin operations.

Use this dashboard to:

- Identify top users performing administrative operations.

- View top 10 operations that caused the most errors.

- View recent diagnostic, network, and replication settings update operations.

Partitions

The Azure Cosmos DB - Partitions dashboard provides details about partitions count, throughput, and physical partition size by database.

Use this dashboard to:

- Monitor partition size and throughput across databases.

- View partitions allocated to different databases and collections.

- Track partition count and usage.

Upgrade/Downgrade the Azure Cosmos DB app (optional)

To update the app, do the following:

Next-Gen App: To install or update the app, you must be an account administrator or a user with the Manage Apps, Manage Monitors, Manage Fields, Manage Metric Rules, and Manage Collectors capabilities, depending on the content types included in the app.

- Select App Catalog.

- In the Search Apps field, search for and then select your app.

Optionally, you can identify apps that can be upgraded in the Upgrade available section.

- To upgrade the app, select Upgrade from the Manage dropdown.

- If the upgrade does not have any configuration or property changes, you will be redirected to the Preview & Done section.

- If the upgrade has any configuration or property changes, you will be redirected to the Setup Data page.

- In the Configure section of your respective app, complete the following fields.

- Field Name. If you already have collectors and sources set up, select the configured metadata field name (for example, _sourcecategory) or specify other custom metadata (for example, _collector) along with its metadata Field Value.

- Click Next. You will be redirected to the Preview & Done section.

Post-update

Your upgraded app will be installed in the Installed Apps folder and dashboard panels will start to fill automatically.

See our Release Notes changelog for new updates in the app.

To revert the app to a previous version, do the following:

- Select App Catalog.

- In the Search Apps field, search for and then select your app.

- To version down the app, select Revert to < previous version of your app > from the Manage dropdown.

Uninstalling the Azure Cosmos DB app (optional)

To uninstall the app, do the following:

- Select App Catalog.

- In the 🔎 Search Apps field, run a search for your desired app, then select it.

- Click Uninstall.

Create monitors for Azure Cosmos DB

From your App Catalog:

- From the Sumo Logic navigation, select App Catalog.

- In the Search Apps field, search for and then select your app.

- Make sure the app is installed.

- Navigate to What's Included tab and scroll down to the Monitors section.

- Click Create next to the preconfigured monitors. In the create monitors window, adjust the trigger conditions and notification settings based on your requirements.

- Scroll down to Monitor Details.

- Under Location, click New Folder.

note

By default, monitors are saved in the root folder. To make maintenance easier, create a new folder in the location of your choice.

- Enter Folder Name. Folder Description is optional.

tip

Including the app version in the folder name helps you track versions during future updates.

- Click Create. Once the folder is created, click Save.

Azure Cosmos DB alerts

These alerts are metrics-based and work for all Azure Cosmos DB accounts.

| Alert Name | Description | Alert Condition | Recover Condition |
|---|---|---|---|
| Azure Cosmos DB - Availability | This alert is triggered when availability drops below 100% in Azure Cosmos DB. | Count < 100 | Count >= 100 |
| Azure Cosmos DB - Normalized RU Consumption | This alert is triggered when high normalized RU consumption is detected in Azure Cosmos DB. | Count >= 90 | Count < 90 |
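In each row of the table above, the recover condition is the complement of the alert condition. The sketch below models each monitor as a two-state machine over a series of metric values, using the table's thresholds.

Two assumptions apply here. Each value stands in for the monitor's aggregated "Count" for one evaluation. Sumo Logic's evaluation windows, aggregation and notification behavior are not modeled. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class AlertRule:
    name: str
    triggers: Callable[[float], bool]  # alert condition from the table
    recovers: Callable[[float], bool]  # recover condition from the table


# Thresholds copied from the alerts table.
RULES = [
    AlertRule("Azure Cosmos DB - Availability", lambda v: v < 100, lambda v: v >= 100),
    AlertRule("Azure Cosmos DB - Normalized RU Consumption", lambda v: v >= 90, lambda v: v < 90),
]


def next_state(rule: AlertRule, alerting: bool, value: float) -> bool:
    """Return whether the rule is alerting after observing one value."""
    if not alerting and rule.triggers(value):
        return True
    if alerting and rule.recovers(value):
        return False
    return alerting  # no transition


def simulate(rule: AlertRule, values: List[float], alerting: bool = False) -> List[bool]:
    """Apply next_state to each value in order and record the resulting states."""
    states = []
    for value in values:
        alerting = next_state(rule, alerting, value)
        states.append(alerting)
    return states


print(simulate(RULES[0], [100, 99.5, 99.9, 100]))
# [False, True, True, False]
```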

Troubleshooting

Metrics collection via Azure Metrics Source

To troubleshoot metrics collection via Azure Metrics Source, follow the instructions in Troubleshooting Azure Metrics Source.