Azure Integration with ARM FAQ

This guide provides answers to frequently asked questions (FAQ) about integrating Azure into an enterprise environment using Azure Resource Manager (ARM) templates.

Common Error Messages

- For Event Hub, see Event Hub error messages.

- For Blob Storage, see Blob Reader error messages.

Integration overview

For an introduction to Sumo Logic’s solution for obtaining application and infrastructure data (logs and metrics) for Azure services using Azure Monitor, see Azure Monitoring.

For an introduction to Sumo Logic's solution for collecting logs using an event-based pipeline from Azure Blob Storage to an HTTP source on Sumo Logic, see Azure Blob Storage.

General FAQ

This section provides answers for general questions regarding Azure integrations.

What types of logs does Azure support?

Azure provides a wide array of configurable security auditing and logging options, as listed in the Azure logging and auditing documentation.

Which node version is supported?

The Azure functions support Node.js ~18. For information on how to change the Node.js version, see the Azure Changing node version support article.

What happens if the template is re-deployed? Are the resources recreated? Is there any data loss?

- If the resource already exists in the resource group and its settings are unchanged, the operation results in no change.

- If you change the settings for a resource, the resource is provisioned with the new settings.

- If you attempt to update the location or type of an existing resource, the deployment fails with an error. Instead, deploy a new resource with the location or type that you need.

How do I route logs to different source categories based on log content?

For Blob Storage, do the following:

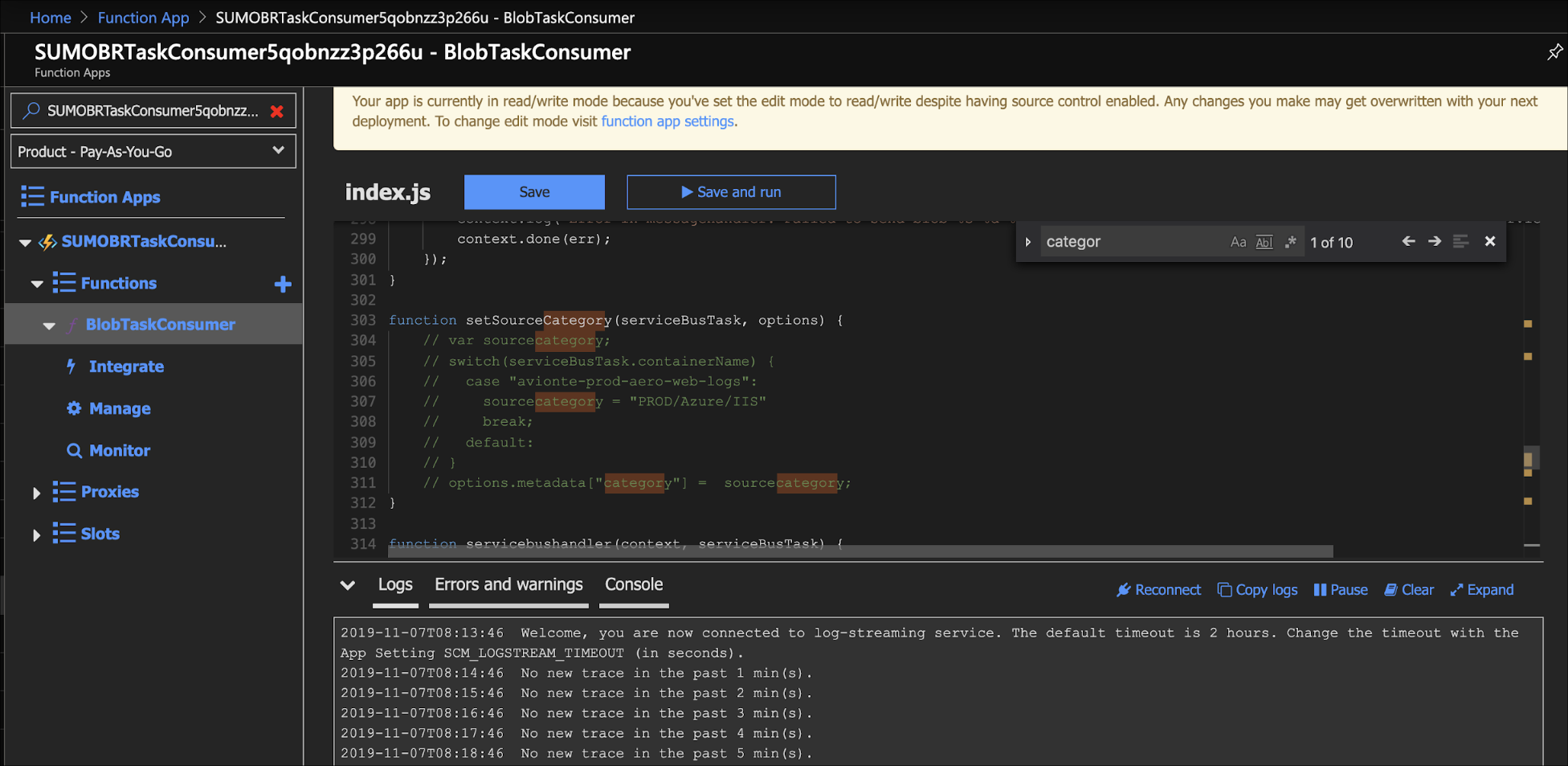

- Go to the BlobTaskConsumer function created by the ARM template.

- Enable Edit Mode and edit the getsourceCategory function to set the source category based on the metadata (url, containerName, blobName, storageName, resourceGroupName, subscriptionId) present in serviceBusTask.
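As an illustration of the kind of change described above, here is a minimal sketch. It assumes that serviceBusTask is a plain object carrying the metadata fields listed, and that the routing function returns a category string. The function name getSourceCategoryForTask, the routing table, and the fallback rule are hypothetical, and the actual getsourceCategory in BlobTaskConsumer may have a different signature. The resulting value would then be sent to the Sumo Logic HTTP source, for example in the X-Sumo-Category header.

```javascript
// Hypothetical routing table: container name -> source category.
// Replace these entries with your own containers and categories.
const CATEGORY_BY_CONTAINER = {
  "insights-logs-networksecuritygroupflowevent": "azure_logs/prod/nsg_flow",
  "audit-logs": "azure_logs/prod/audit",
};

// Sketch of a source-category router for one Service Bus task.
// Assumes serviceBusTask carries url, containerName, blobName,
// storageName, resourceGroupName, and subscriptionId.
function getSourceCategoryForTask(serviceBusTask, defaultCategory) {
  const { containerName, storageName } = serviceBusTask;

  // 1. An explicit per-container mapping wins.
  if (Object.prototype.hasOwnProperty.call(CATEGORY_BY_CONTAINER, containerName)) {
    return CATEGORY_BY_CONTAINER[containerName];
  }

  // 2. Otherwise, derive a category from the storage account name.
  if (storageName) {
    return `${defaultCategory}/${storageName}`;
  }

  // 3. Fall back to the default category.
  return defaultCategory;
}

// Example task with the metadata fields listed above (values are made up).
const task = {
  url: "https://mystorage.blob.core.windows.net/audit-logs/2024/01/app.log",
  containerName: "audit-logs",
  blobName: "2024/01/app.log",
  storageName: "mystorage",
  resourceGroupName: "my-rg",
  subscriptionId: "00000000-0000-0000-0000-000000000000",
};

console.log(getSourceCategoryForTask(task, "azure_logs")); // azure_logs/prod/audit
```

Keeping the mapping in a single table makes new routes a one-line change. The fallback keeps unmapped containers flowing under a predictable category instead of dropping them.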

For Azure Monitor, you need to create an FER or create multiple sources.

The following is a Field Extraction Rule (FER) solution.

You extract the resource type in the FER and override _sourceCategory with a custom source category (for example, azure_logs/prod/NETWORKSECURITYGROUPS) so that the new source category is used when a user searches. For example:

_sourceCategory = azure_logs | json auto | parse field=resource_id "/PROVIDERS/*/*/*" as provider, resource_type, resource_name | concat("azure_logs/prod/", resource_type) as _sourceCategory
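Before saving the FER, you may want to preview which category a given resource ID maps to. The intended mapping, from a resource ID to a category ending in the resource type, can be sketched in JavaScript. This sketch assumes the standard resource ID layout /SUBSCRIPTIONS/<id>/RESOURCEGROUPS/<rg>/PROVIDERS/<namespace>/<type>/<name>, with the resource type as the segment after the provider namespace. The helper name sourceCategoryFromResourceId is hypothetical.

```javascript
// Hypothetical helper: derive azure_logs/prod/<RESOURCE_TYPE> from an
// Azure resource ID. Assumes the standard resource ID layout:
// /SUBSCRIPTIONS/<id>/RESOURCEGROUPS/<rg>/PROVIDERS/<namespace>/<type>/<name>
function sourceCategoryFromResourceId(resourceId, prefix = "azure_logs/prod") {
  const segments = resourceId.split("/").filter((s) => s.length > 0);
  const providersIdx = segments.findIndex((s) => s.toUpperCase() === "PROVIDERS");

  // The resource type is two segments after PROVIDERS (after the namespace).
  const resourceType = providersIdx >= 0 ? segments[providersIdx + 2] : undefined;
  if (!resourceType) {
    return prefix; // Not a recognizable resource ID; keep the base category.
  }
  return `${prefix}/${resourceType.toUpperCase()}`;
}

const id =
  "/SUBSCRIPTIONS/0000/RESOURCEGROUPS/MY-RG/PROVIDERS/" +
  "MICROSOFT.NETWORK/NETWORKSECURITYGROUPS/MY-NSG";
console.log(sourceCategoryFromResourceId(id)); // azure_logs/prod/NETWORKSECURITYGROUPS
```

Upper-casing the type keeps categories consistent whether Azure reports the ID in upper or mixed case.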

How do I view Azure function logs?

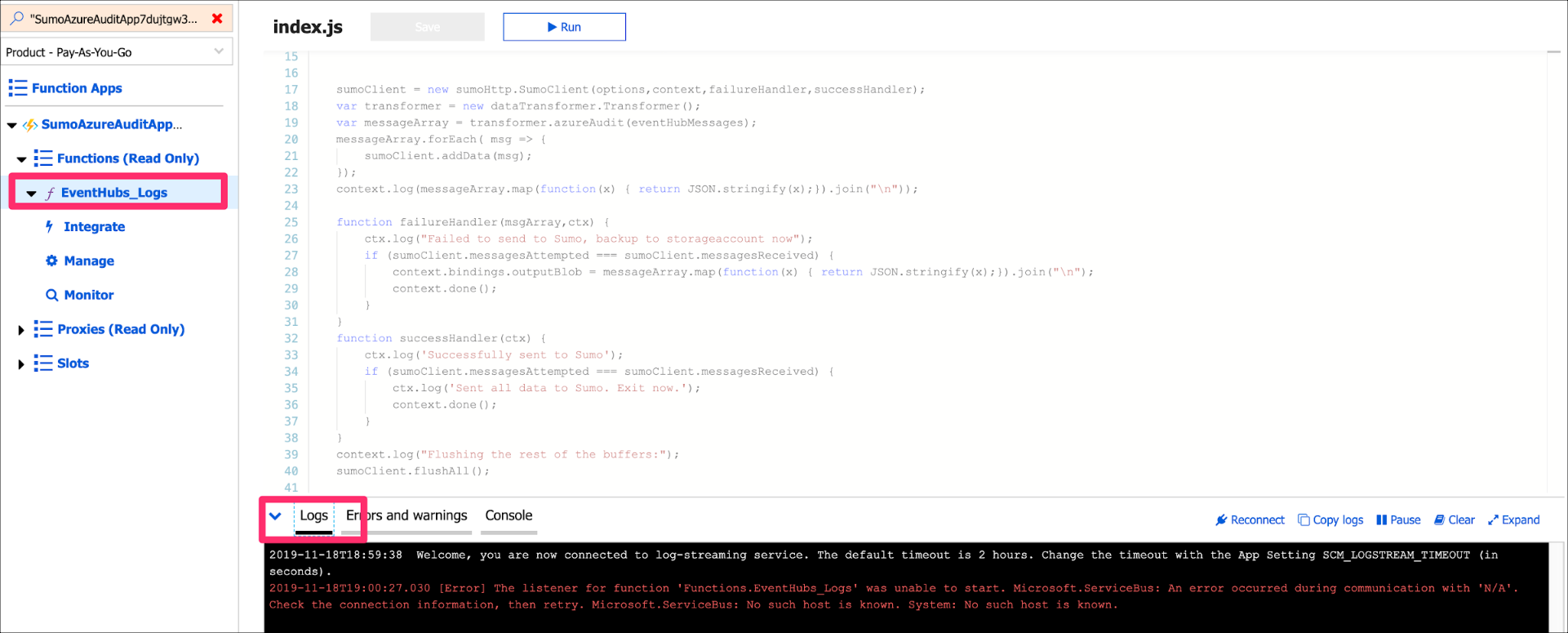

Go to the function and click the Logs tab to view real-time logs, as shown in the following example.

How do I export Azure function logs?

Application Insights is enabled for all Azure functions. To export Azure function logs, do the following:

- In the Azure portal, navigate to your Azure Function app.

- Go to Monitoring > Logs, and run the following query:

union isfuzzy=true
    availabilityResults,
    requests,
    exceptions,
    pageViews,
    traces,
    customEvents,
    dependencies
| order by timestamp desc
| take 100

- Click the Export button to export the logs.

Event Hub FAQs

This page provides answers for frequently asked integration questions about Azure Event Hub.

What log sources are supported by Event Hub?

- Exporting data from Azure Monitor: Most services, including SQL DB, Event Hubs, Cosmos DB, Data Factory, Key Vault, and Stream Analytics, export their logs and metrics to Azure Monitor.

- Security Alerts from Azure Security Center: Setup.

- Azure SQL (audit logs are in preview; diagnostic logs and activity logs can be exported to Event Hub).

- Virtual Machines Activity logs and Diagnostic logs: Setup.

- Guest OS Monitoring Data via Agent: Setup.

- Azure Active Directory.

What if there is an existing storage account or Event Hub?

- The storage account creation limit per region, per subscription, is 200.

- The limit for the number of Event Hubs per namespace is 10.

- To use an existing resource, update the connection strings (StorageConnectionString for the storage account and AzureEventHubConnectionString for the Event Hub) and remove the corresponding resource from the template.
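When you reuse an existing resource, you may want to sanity-check its connection string before pasting it into StorageConnectionString or AzureEventHubConnectionString. This sketch assumes the usual semicolon-separated Key=Value format. It also assumes that storage account strings carry AccountName and AccountKey, and that Event Hub strings carry Endpoint, SharedAccessKeyName, and SharedAccessKey. The helper names and the required-key lists are assumptions, not part of the ARM template. In a running function app, app settings are exposed as environment variables, so the value could also be read from process.env.

```javascript
// Split "Key1=Value1;Key2=Value2" into an object. Values may contain "="
// (for example, base64 keys ending in "=="), so split on the first "=" only.
function parseConnectionString(conn) {
  const result = {};
  for (const part of conn.split(";")) {
    if (!part.trim()) continue; // tolerate a trailing ";"
    const eq = part.indexOf("=");
    if (eq <= 0) continue; // skip malformed fragments
    result[part.slice(0, eq).trim()] = part.slice(eq + 1).trim();
  }
  return result;
}

// Assumed minimum keys for each app setting.
const REQUIRED_KEYS = {
  StorageConnectionString: ["AccountName", "AccountKey"],
  AzureEventHubConnectionString: ["Endpoint", "SharedAccessKeyName", "SharedAccessKey"],
};

// Returns the required keys missing from the given setting's value.
function missingKeys(settingName, conn) {
  const parsed = parseConnectionString(conn);
  return REQUIRED_KEYS[settingName].filter((k) => !(k in parsed));
}

const storage =
  "DefaultEndpointsProtocol=https;AccountName=mystorage;AccountKey=abc==;EndpointSuffix=core.windows.net";
const eventHub =
  "Endpoint=sb://myns.servicebus.windows.net/;SharedAccessKeyName=RootManageSharedAccessKey";

console.log(missingKeys("StorageConnectionString", storage)); // []
console.log(missingKeys("AzureEventHubConnectionString", eventHub)); // [ 'SharedAccessKey' ]
```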

How is the function scaled?

To increase the number of parallel instances: messages are ordered within a single partition, and by default messages are distributed across partitions in a round-robin manner. Each Function instance is backed by one EventProcessorHost (EPH). Event Hubs allows only one EPH to hold a lease on a partition, but more than one partition can be assigned to an EPH. This means the number of EPHs is at most the number of partitions in the Event Hub. Therefore, to increase parallelism, increase the number of partitions in the Event Hub (the default maximum is 32; you can raise a support request to increase it further). For more information, see the Azure Trigger Scaling document.
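The one-lease-per-partition rule means that instances beyond the partition count sit idle. The sketch below models this with a simple round-robin assignment of partitions to hosts. The real lease balancing in the Event Hubs SDK is more dynamic, so treat this only as an illustration of the upper bound. The function names are hypothetical.

```javascript
// Assign each partition to exactly one host, round-robin.
// Returns one array per host listing the partition IDs it holds.
function assignPartitions(partitionCount, hostCount) {
  if (hostCount < 1) throw new Error("hostCount must be at least 1");
  const assignment = Array.from({ length: hostCount }, () => []);
  for (let p = 0; p < partitionCount; p++) {
    assignment[p % hostCount].push(p);
  }
  return assignment;
}

// Number of hosts that actually receive work: never more than the partitions.
function activeHosts(partitionCount, hostCount) {
  return assignPartitions(partitionCount, hostCount).filter((ps) => ps.length > 0).length;
}

console.log(assignPartitions(4, 6));
console.log(activeHosts(4, 6)); // 4
console.log(activeHosts(32, 6)); // 6
```

With 4 partitions, adding a fifth or sixth host adds no throughput. With 32 partitions, all 6 hosts stay busy.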

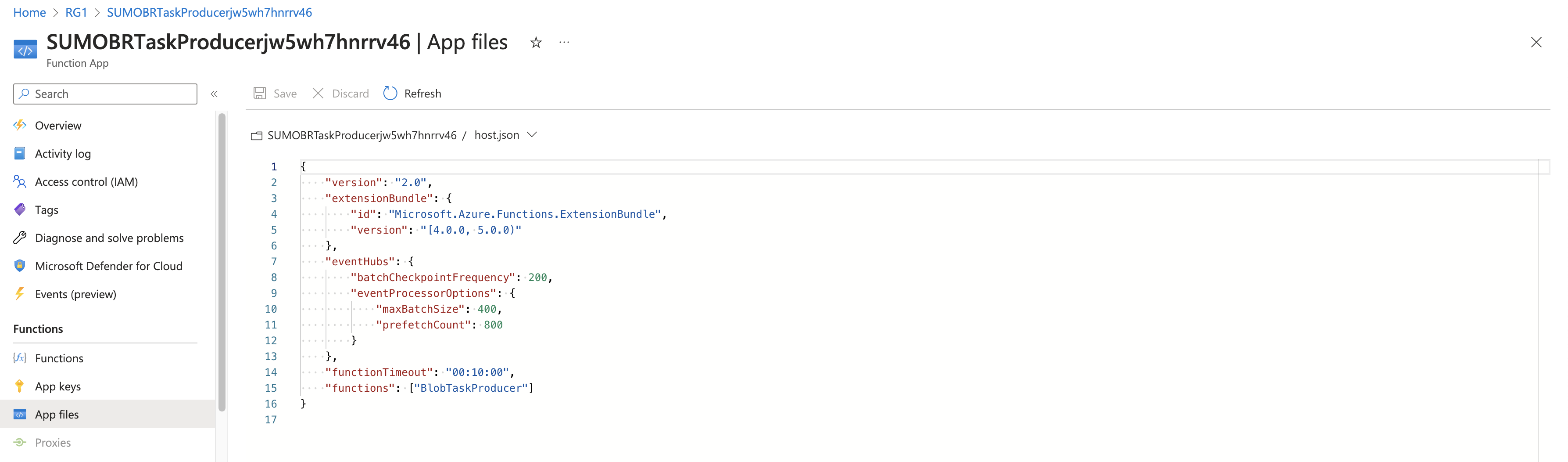

To increase the number of messages fetched per function invocation, increase the maxBatchSize property (the maximum event count received per receive loop) in host.json. The default is 64. For more information, see the Azure Trigger - host.json properties document.

How much does it cost?

For specific details, see the Azure pricing pages for Azure Functions, Event Hubs, and Storage Accounts.

Functions Example:

The function executes 3 million times during the month with a memory consumption of 512 MB, an execution duration of 1 second, and a message size of 64 KB.

Resource consumption: (512 MB / 1,024 MB per GB) × 1 sec × 3 million = 1.5 million GB-s - 400,000 GB-s (monthly free grant) = 1.1 million GB-s × $0.000016/GB-s = $17.60

Execution consumption: 3 million - 1 million (monthly free grant) = 2 million executions × $0.20 per million = $0.40

Cost of Azure Function: $18.00

Event Hub Example:

Ingress events (billed in multiples of 64 KB): 3 million × $0.028 per million events = $0.084

Throughput units (1 TU = 1 MB/sec ingress + 2 MB/sec egress + 84 GB event storage, aggregated across all Event Hubs in a namespace): $0.015/hour × 30 × 24 hours = $10.80

Cost of Event Hub: $10.88

Storage Account Example:

This assumes a General Purpose v2 storage account in the hot tier and the worst-case scenario of storing all events in storage, with 1 message processed per receive loop by the Azure function.

Storage prices: (64 KB × 3 million) / (1024 × 1024) = 183.1 GB per month × $0.0208 per GB = $3.81

Operation prices: write operations at $0.05 per 10,000 requests × 3 million = $15.00

Cost of Storage Accounts: $18.81

Total = $47.69
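The worked example can be reproduced with a short script, which makes it easy to substitute your own volumes. The unit prices and free grants are the ones used in the example above and may not match current Azure list prices. The function names are illustrative.

```javascript
// Unit prices and free grants as used in the example (not live prices).
const PRICES = {
  functionGbSecond: 0.000016,      // $ per GB-s
  functionPerMillionExecs: 0.2,    // $ per million executions
  freeGbSeconds: 400_000,          // monthly free grant
  freeExecutions: 1_000_000,       // monthly free grant
  eventHubPerMillionEvents: 0.028, // $ per million ingress events
  eventHubTuPerHour: 0.015,        // $ per throughput unit per hour
  storagePerGb: 0.0208,            // $ per GB per month (hot tier)
  storagePer10kWrites: 0.05,       // $ per 10,000 write operations
};

function functionsCost(executions, memoryMb, durationSec) {
  const gbSeconds = (memoryMb / 1024) * durationSec * executions;
  const billableGbSec = Math.max(0, gbSeconds - PRICES.freeGbSeconds);
  const billableExecs = Math.max(0, executions - PRICES.freeExecutions);
  return billableGbSec * PRICES.functionGbSecond +
    (billableExecs / 1_000_000) * PRICES.functionPerMillionExecs;
}

function eventHubCost(events, throughputUnits, hours) {
  return (events / 1_000_000) * PRICES.eventHubPerMillionEvents +
    throughputUnits * PRICES.eventHubTuPerHour * hours;
}

// Worst case from the example: every message is stored and written once.
function storageCost(events, messageKb) {
  const gb = (messageKb * events) / (1024 * 1024);
  return gb * PRICES.storagePerGb + (events / 10_000) * PRICES.storagePer10kWrites;
}

const events = 3_000_000;
const fn = functionsCost(events, 512, 1);
const eh = eventHubCost(events, 1, 30 * 24);
const st = storageCost(events, 64);

const dollars = (x) => `$${x.toFixed(2)}`;
console.log("Functions:", dollars(fn));
console.log("Event Hub:", dollars(eh));
console.log("Storage:", dollars(st));
console.log("Total:", dollars(fn + eh + st));
```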

How do I troubleshoot an Event Hub Integration?

- Logs Collection Pipeline: Refer to the Troubleshooting section in Azure Event Hubs Source for Logs.

- Metrics Collection Pipeline: Refer to the Troubleshooting metrics collection section in Collect Metrics from Azure Monitor. You can also manually trigger the Azure function using Sample Logs Payload and Sample Metrics Payload.

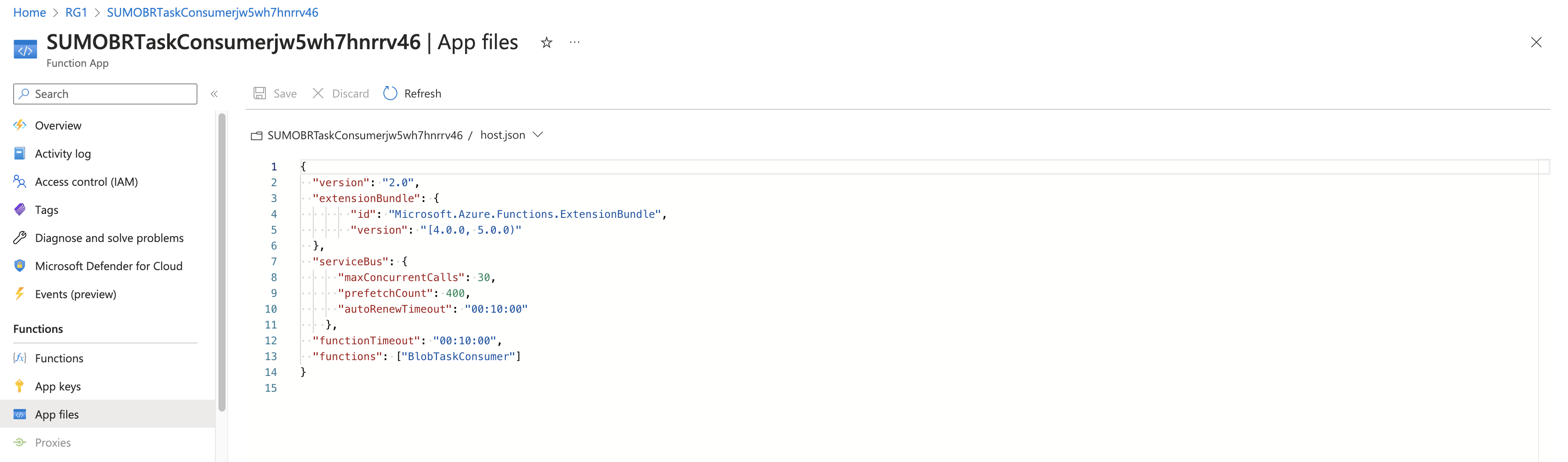

What can I do if a function is timing out?

The default timeout for functions on a Consumption plan is 5 minutes. You can increase the value to 10 minutes for the Function App by changing the functionTimeout property in the host.json file.
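The functionTimeout value in host.json is a timespan string in hh:mm:ss form, for example "00:10:00". As a small illustration, the hypothetical helper below converts such a value to minutes and checks it against the 10-minute Consumption plan limit stated above. You might run it before editing host.json.

```javascript
// Consumption plan upper limit for functionTimeout, in minutes (per the text above).
const CONSUMPTION_MAX_MINUTES = 10;

// Parse an "hh:mm:ss" timespan into minutes. Returns NaN if malformed.
function timespanToMinutes(value) {
  const match = /^(\d{1,2}):([0-5]\d):([0-5]\d)$/.exec(value);
  if (!match) return NaN;
  const [, h, m, s] = match.map(Number);
  return h * 60 + m + s / 60;
}

// True if the value is a well-formed, positive timespan within the limit.
function isValidConsumptionTimeout(value) {
  const minutes = timespanToMinutes(value);
  return Number.isFinite(minutes) && minutes > 0 && minutes <= CONSUMPTION_MAX_MINUTES;
}

console.log(isValidConsumptionTimeout("00:10:00")); // true
console.log(isValidConsumptionTimeout("00:15:00")); // false
```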

How do I enable Application Insights?

Follow the instructions in the Microsoft Azure documentation for Enabling Application Insights integration.

How can I debug using print statements?

- In Function App Settings, under the Function app edit mode section, change the edit mode. You can now edit the function.

- Enter context.log("debug statements") and save.

- Run using sample logs, as described in Troubleshooting log collection.
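For reference, here is roughly what a debug statement looks like in a Node.js function written in the classic context-based programming model. The handler body, the message shape, and the stand-in context used to run it locally are illustrative assumptions; the functions created by the ARM template have their own logic.

```javascript
// Minimal Event Hub-triggered handler in the classic Node.js model,
// where the runtime passes a context object and the batch of messages.
async function handler(context, eventHubMessages) {
  // Debug statement: appears in the function's Logs tab / Application Insights.
  context.log(`debug: received ${eventHubMessages.length} message(s)`);

  for (const [i, msg] of eventHubMessages.entries()) {
    context.log(`debug: message ${i}:`, JSON.stringify(msg));
  }
}
module.exports = handler;

// Local run with a stand-in context that records log lines instead of
// sending them to Azure (hypothetical harness, not an Azure API).
const logged = [];
const fakeContext = { log: (...args) => logged.push(args.join(" ")) };

handler(fakeContext, [{ records: [] }, { records: [{ level: "Info" }] }]).then(() => {
  console.log(logged);
});
```

A stand-in context like this lets you check the debug output before you paste the change into the portal editor.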

How do I ensure that the Event Hub is receiving log messages?

If events are not getting into the Event Hub, the event grid subscription publisher settings are not configured properly.

Event Hub export error messages

-

Resources should be in the same region. Resource

/subscriptions/c088dc46-d692-42ad-a4b6-9a542d28ad2a/resourceGroups/AzureAuditEventHub/providers/Microsoft.Network/networkSecurityGroups/testhimvm-nsgis in regioneastusand resource/subscriptions/c088dc46-d692-42ad-a4b6-9a542d28ad2a/resourcegroups/testresourcegroup/providers/microsoft.eventhub/namespaces/sumoazureaudittf7grgv4prygwis in regionwestus. This happens while exporting logs or metrics from Azure monitor to Event Hub. The service generating the logs and Event Hub should be deployed in the same region. -

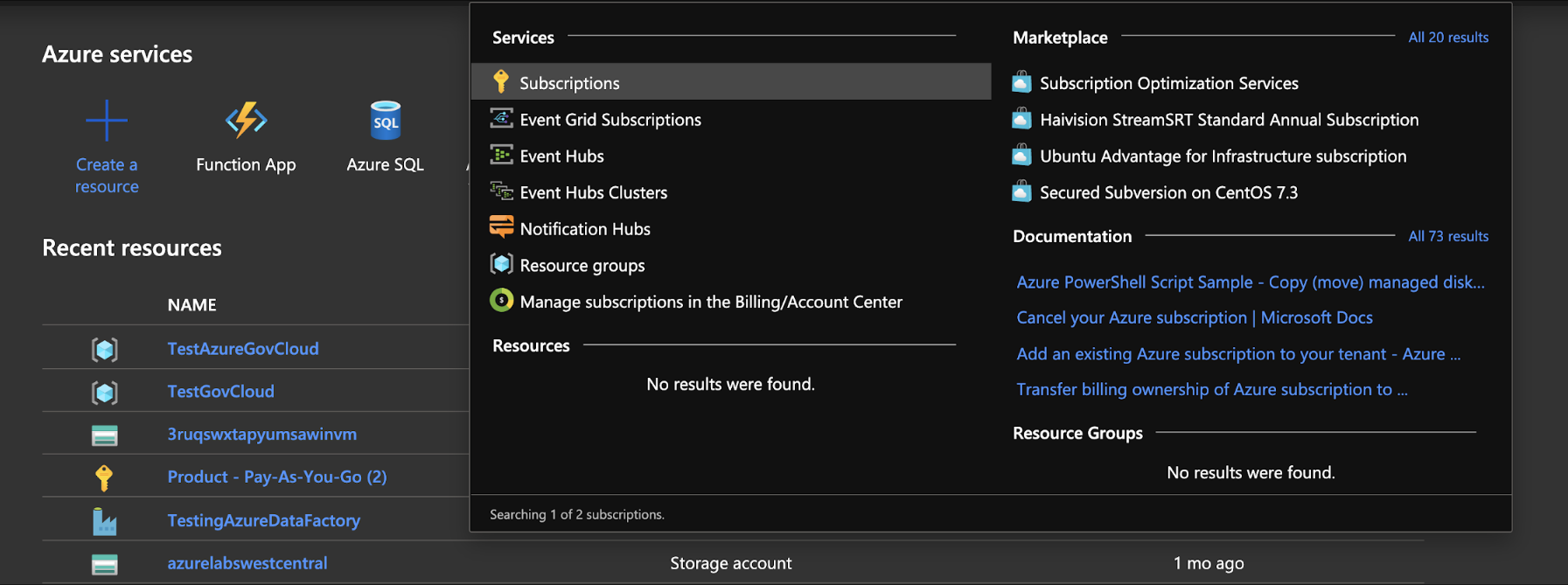

Create or update activity logprofilesfailure. If you get this error message in Azure when setting up an Event Hub Export, do the following:

- Search for Subscriptions in all services.

- Select your subscription > Resource Providers.

- Search for and Enable microsoft.insights.

- If you receive an Azure error similar to the following when exporting logs, it means that Azure Active Directory is not associated with an Azure subscription. Follow the instructions to Associate or add an Azure subscription to your Microsoft Entra tenant.

  An Azure subscription is required to use this capability. Please create an Azure subscription to get started.

Blob Storage FAQs

This page provides answers for frequently asked integration questions about Azure Blob Storage.

What is FileOffsetMap?

FileOffsetMap is a table created in Azure Table Storage that is used for internal bookkeeping. The events generated by the storage account contain only the blob size, so the Azure functions receive event messages containing sizes such as 30 bytes, 40 bytes, and 70 bytes, in random order, along with the blob path. Sumo Logic stores a mapping of each file to its current offset to determine the next byte range to read.
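The bookkeeping can be illustrated with an in-memory map. This is a simplified sketch, not Sumo Logic's implementation. It assumes each event reports the blob's current total size and that the stored offset is the number of bytes already handed out for reading. It also replaces the Azure Table Storage table with a JavaScript Map. Events that report a size at or below the stored offset produce no new range.

```javascript
// In-memory stand-in for the FileOffsetMap table: blob path -> next offset.
const fileOffsetMap = new Map();

// Given a blob-size event, return the byte range still to be read as
// { start, end } (end exclusive), or null if nothing new is available.
function nextRange(blobPath, reportedSize) {
  const offset = fileOffsetMap.get(blobPath) ?? 0;
  if (reportedSize <= offset) {
    return null; // Stale or out-of-order event: already covered.
  }
  fileOffsetMap.set(blobPath, reportedSize); // Advance the stored offset.
  return { start: offset, end: reportedSize };
}

// Events for one append blob arriving out of order (sizes in bytes).
const path = "container/app.log";
for (const size of [40, 30, 70]) {
  console.log(size, nextRange(path, size));
}
```

With the sample sizes 40, 30, and 70, the sketch hands out [0, 40), skips 30, and then hands out [40, 70). Function instances share the real table, so a production version would need an atomic read-modify-write, for example a conditional update using the table entity's ETag. Otherwise two instances could read overlapping ranges.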

How does the collection mechanism work?

For a summary of how various components are stitched together in the pipeline, see the Monitoring data flow section for block blob and append blob of the Azure Blob Storage page.

How do I scale the function?

From the Application settings page, you can do any of the following to scale the function:

- Increase maxBatchSize in the BlobTaskProducer host.json from the function app settings. This fetches more events and creates larger blocks for reading.

- Increase the maxConcurrentCalls setting in the BlobTaskConsumer host.json. We recommend increasing it in small increments so that you do not hit the throttling limit.

- Increase prefetchCount to 2 × maxBatchSize.

How do I ingest logs from Azure Blob Storage into multiple sourceCategories?

The following is a Field Extraction Rule (FER) solution.

You extract a field, such as the container name, in the FER and override _sourceCategory with _sourceCategory/<value> so that the new source category is used when a user searches. The following example uses the network security group name parsed from resource_id:

_sourceCategory = azure_logs | json auto | parse field=resource_id "/NETWORKSECURITYGROUPS/*" as nsg_name | concat("azure_logs/", nsg_name) as _sourceCategory

Another approach is to modify the function to send source category in headers. For more information, see How do I route logs to different source categories based on log content?

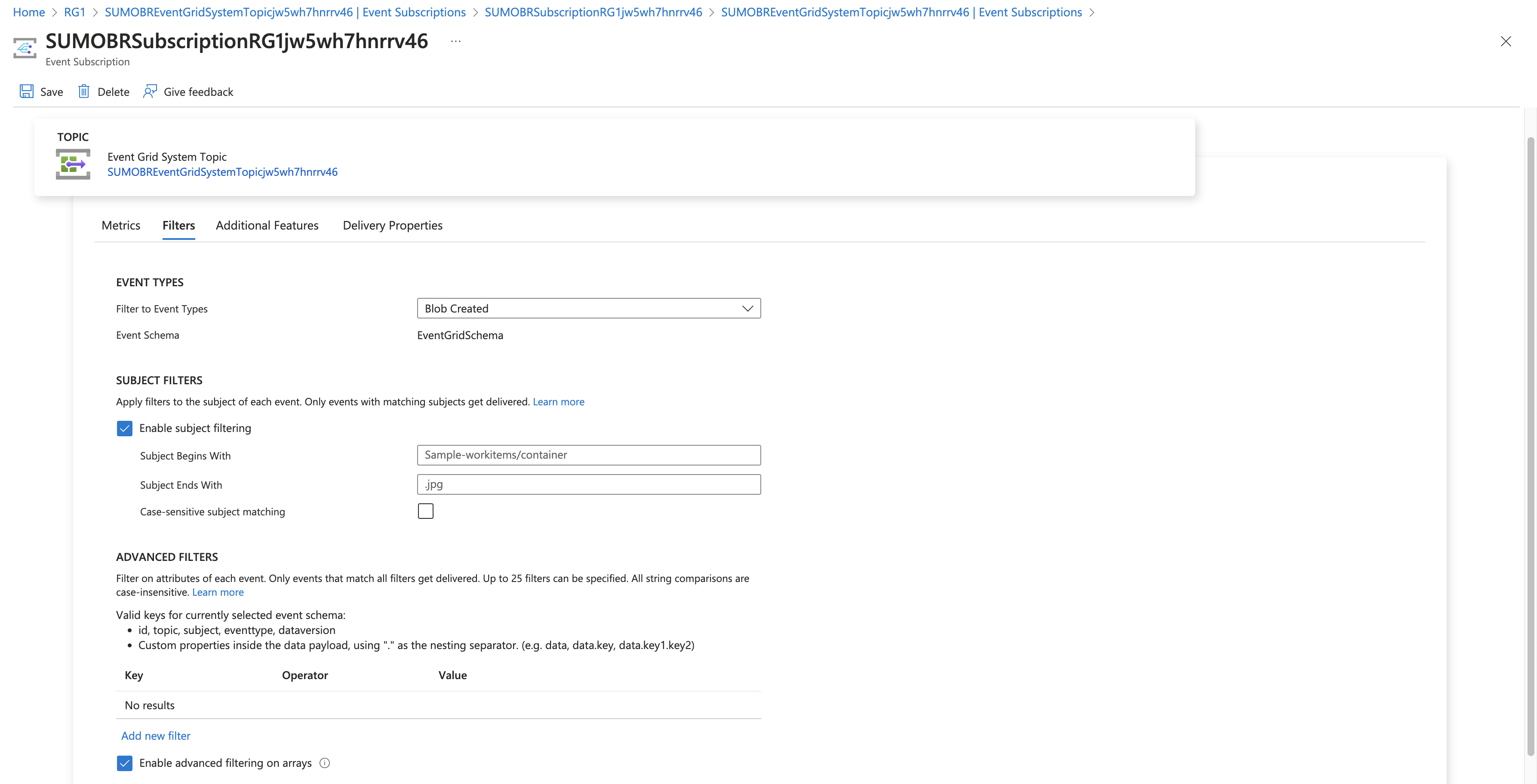

How do I filter events by container name?

To filter events by container name, do the following:

- Go to Event subscription > Filters tab.

- Enter the following in the Subject Begins With field, replacing <container_name> with the name of the container from which you want to export logs:

  /blobServices/default/containers/<container_name>/
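For Blob Storage events, the event subject has the form /blobServices/default/containers/<container_name>/blobs/<blob_path>, and the filter compares it against this prefix. To check which blobs a filter will pass, you can apply the same prefix test locally. The helpers below are a hypothetical illustration, and Event Grid's own matching is authoritative. The sketch assumes Event Grid's default of case-insensitive subject matching.

```javascript
// Build the "Subject Begins With" prefix for a container.
function subjectPrefix(containerName) {
  return `/blobServices/default/containers/${containerName}/`;
}

// Check whether an event subject would pass the prefix filter.
// Case-insensitive by default (assumed to mirror Event Grid's default).
function passesFilter(subject, containerName, caseSensitive = false) {
  const prefix = subjectPrefix(containerName);
  return caseSensitive
    ? subject.startsWith(prefix)
    : subject.toLowerCase().startsWith(prefix.toLowerCase());
}

const subject = "/blobServices/default/containers/insights-logs/blobs/2024/01/01/PT1H.json";
console.log(passesFilter(subject, "insights-logs")); // true
console.log(passesFilter(subject, "insights")); // false
```

The trailing slash in the prefix matters. Without it, a filter for insights would also match blobs in insights-logs.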

How do I troubleshoot Blob Storage integration?

- Verify Block Blob Create Events are getting published - If events are not being created, then either no new blobs are being created or the Event Grid subscription subscriber settings are not configured correctly. For example, the regex for the container may not match, or the Event Grid service could be down.

- Verify Event Hub is receiving log messages - If events are not getting into the Event Hub, then the Event Grid subscription publisher settings are not configured properly.

- Verify Service Bus Queue is receiving tasks - If Service Bus is not receiving data, there might be a problem with the SUMOBRTaskProducer function. Check the function's invocation logs. For example, Microsoft may have changed the event payload format, the function may be unable to write to Service Bus, or Service Bus may be down.

- Verify with live tail - If you are not getting logs into Sumo Logic and everything else checks out, then there might be an issue in the SUMOBRTaskConsumer function. Check the function's invocation logs. For example, the function may not be able to read from the storage account, the blob may have been deleted before it was read, or the log format may not be supported.

Blob Reader error messages

- Error: The request is being throttled

  at client.pipeline.error (D:\home\site\wwwroot\BlobTaskConsumer\node_modules\azure-arm-storage\lib\operations\storageAccounts.js:1444:19) at retryCallback (D:\home\site\wwwroot\BlobTaskConsumer\node_modules\ms-rest\lib\filters\systemErrorRetryPolicyFilter.js:89:9) at retryCallback (D:\home\site\wwwroot\BlobTaskConsumer\node_modules\ms-rest\lib\filters\exponentialRetryPolicyFilter.js:140:9) at D:\home\site\wwwroot\BlobTaskConsumer\node_module...

  FunctionName: BlobTaskConsumer

  Solution: Increase the maxBatchSize in BlobTaskProducer's host.json. This fetches more events and creates larger blocks for reading. Then, decrease the maxConcurrentCalls setting in BlobTaskConsumer's host.json. This limits the number of concurrent invocations, reducing the number of read requests.

- FileOffSetMap Table does not exist

  Error: HTDECK-JOBCOSTING-API__BE93-2019-05-08-14-e5260b.log"": [48255]} Exception while executing function: Functions.BlobTaskProducer Microsoft.Azure.WebJobs.Host.FunctionInvocationException : Exception while executing function: Functions.BlobTaskProducer ---> System.Exception : StorageError: The table specified does not exist. RequestId:3914a31a-e002-000e-1dad-05a995000000 Time:2019-05-08T14:48:29.9940095Z at async Microsoft.Azure.WebJobs.Script.Description.NodeFunctionInvoker.InvokeCore(Object[] parameters,FunctionInvocationContext context) at C:\projects\azure-webjobs-sdk-script\src\WebJobs.Script\Description\Node\NodeFunctionInvoker.cs : 196

  Solution: This error occurs when the FileOffsetMap table does not exist. Check and confirm that you created the table in Step 3: Configure Azure resources using ARM template, substep 11.

-

You'll see a Deployment Failed error when roleAssignment is not unique but we are already using resourcegroup.id in a name that is unique. The error details are:

Tenant ID, application ID, principal ID, and scope are not allowed to be updated. (Code: RoleAssignmentUpdateNotPermitted)For more information, see Create roleAssignment fails with error "Tenant ID, application ID, principal ID, and scope are not allowed to be updated".

Solution: Create a new resource group for the Sumo Logic collection resources. If that doesn't fix the problem, then change the variables in the ARM template from this:

"consumer_roleGuid": "[guid(parameters('sites_blobreaderconsumer_name'), uniqueString(deployment().name, resourceGroup().id))]", \

"dlq_roleGuid": "[guid(parameters('sites_DLQProcessor_name'), uniqueString(deployment().name, resourceGroup().id))]", \To this:

"consumer_roleGuid": "[guid(parameters('sites_blobreaderconsumer_name'), uniqueString(‘<random unique word>’, resourceGroup().id))]", \

"dlq_roleGuid": "[guid(parameters('sites_DLQProcessor_name'), uniqueString(‘<random unique word>’, resourceGroup().id))]" -

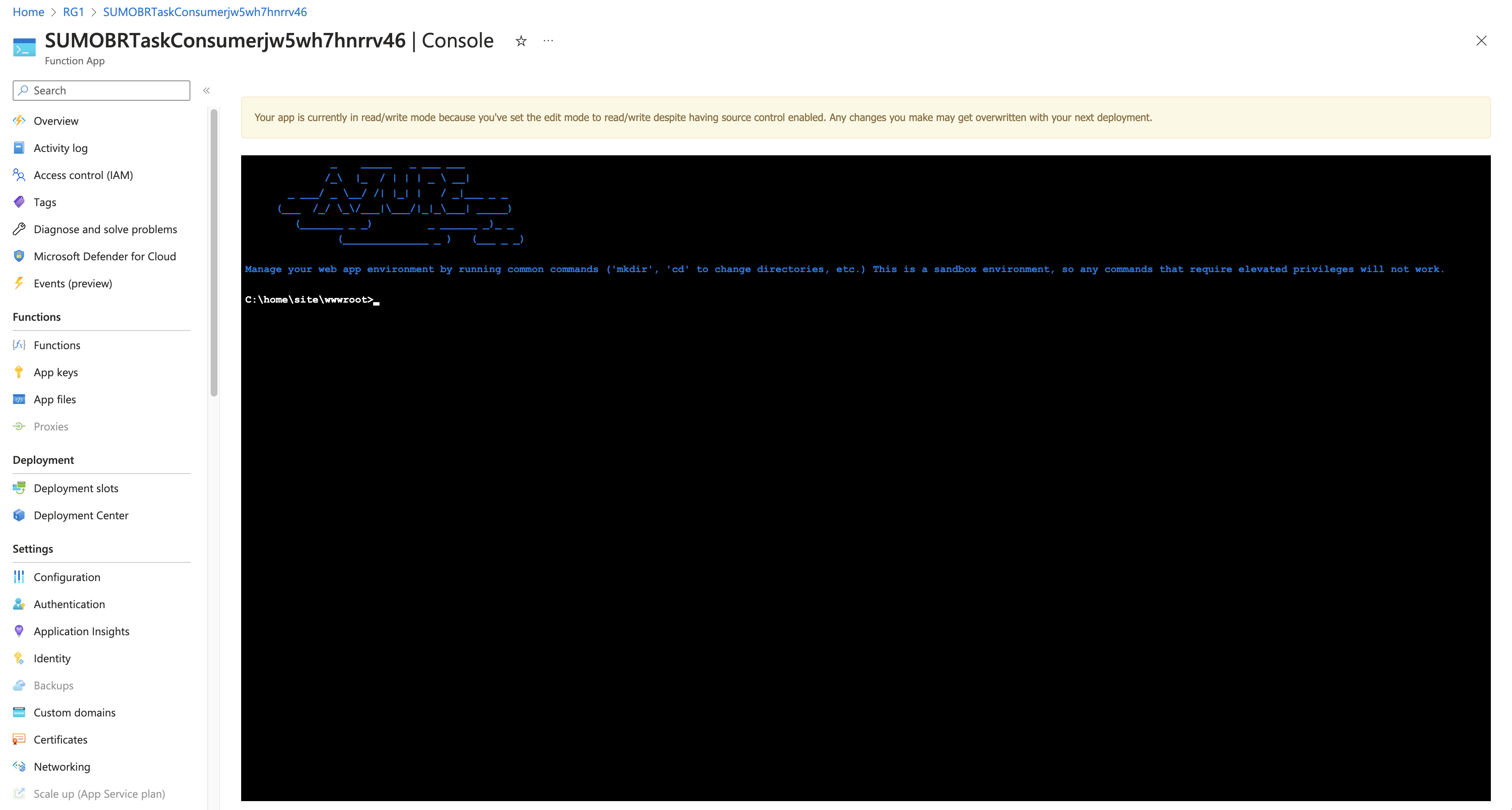

Error: Azure fails to install dependencies on a node.

System.AggregateException : One or more errors occurred.

---> Error: Cannot find module 'azure-storage' \Solution: Run

npm installfrom the console.

- Subscription for resource type not registered

  Error: Subscription for Microsoft.EventGrid is not registered.

  Solution: To register the provider, do the following:

- Go to subscriptions.

- Select the name of the subscription where the ARM template is deployed.

- Select Resource providers under Settings on the left.

- Search for Microsoft.EventGrid and register it.

- Native Access Violation in Azure Functions

  ExitCode C0000005
  ExitCodeString NATIVE ACCESS VIOLATION
  Managed Exception = System.AccessViolationException: Attempted to read or write protected memory. This is often an indication that other memory is corrupt.
  CallStack - Managed Exception

  This error occurs in situations where the runtime initiates a host shutdown via HostingEnvironment.InitiateShutdown, for example, when an unhandled global exception occurs, when a FunctionTimeoutException is thrown, or when performance counter thresholds are exceeded (HostHealthMonitor).

  Solution: If you have been using this function for a long time, we recommend redeploying the solution with the new ARM templates.

  If the error still persists, you can migrate from the Consumption plan to a Premium plan by making the following changes in the ARM template:

  {
    "type": "Microsoft.Web/serverfarms",
    "kind": "app",
    "name": "[parameters('serverfarms_SumoAzureLogsAppServicePlan_name')]",
    "apiVersion": "2018-02-01",
    "location": "[resourceGroup().location]",
    "sku": {
      "name": "P1v2",
      "tier": "PremiumV2",
      "size": "P1v2",
      "family": "Pv2",
      "capacity": 2
    },
    "properties": {
      "maximumElasticWorkerCount": 1,
      "perSiteScaling": false,
      "targetWorkerCount": 0,
      "targetWorkerSizeId": 0,
      "reserved": false,
      "isSpot": false,
      "isXenon": false,
      "hyperV": false
    },
    "dependsOn": []
  }

- Error in initiation of Azure functions created by the ARM template, with the error message:

  System.Private.CoreLib: Access to the path 'C:\home\site\wwwroot' is denied

  This also results in an unauthorized error in the Azure function's error logs. Every Azure function has an associated storage account that it uses for logs, trigger events, metadata, and so on. The ARM template creates three Azure functions and a single storage account (referred to here as the sumoBRlogs storage account). This problem occurs when access to this storage account is restricted (not public).

  Solution: Integrate the Azure functions with a virtual network (VNet) and allow that virtual network to access the sumoBRlogs storage account. Follow these steps to set up VNet integration. Then set this environment variable to 1 in all three Azure functions created by the ARM template: Producer, Consumer, and DLQ.