Use JSON to Configure Sources

Installed Collector and Hosted Collector Sources can be configured using UTF-8 encoded JSON files. Installed Collectors can use JSON files to configure their Sources when using Local Configuration File Management. You can also configure Sources for Hosted and Installed Collectors with the Collector Management API.

This feature is not supported for our OpenTelemetry Collector.

Defining a Source JSON file

JSON files must be UTF-8 encoded following RFC 8259.

When registering a Collector, you can define a source JSON file using the sources or syncSources parameter in your user.properties or sumo.conf configuration file. These parameters are used the first time a collector is set up.

| Parameter | Type | Description |

|---|---|---|

| sources | String | Sets the JSON file describing sources to configure on registration. To make changes to collector sources after the Collector has been configured, you can use the Collector Management API or the Sumo web application. |

| syncSources | String | Sets the JSON file describing sources to configure on registration, which will be continuously monitored and synchronized with the Collector's configuration. |

For more information on setting the syncSources parameter, see Local Configuration File Management.

Using JSON to configure multiple Sources

You can use JSON to configure multiple sources in either of the following ways:

- Create a single JSON file with the configuration information for all the sources (sources.json).

- Create individual JSON files, one for each source, and then combine them in a single folder. You then configure the source folder instead of the individual sources.

The maximum number of Sources allowed on a Collector is 1,000.

See Options for specifying sources in local configuration file(s) for more information.
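
As a rough sketch of the single-file option, a sources.json file with two Sources might look like the following. The api.version and sources wrapper and the Syslog-specific port and protocol keys mirror the data forwarding example later on this page. The Source names, categories, and port numbers are placeholders chosen for illustration.

```json
{
  "api.version": "v1",
  "sources": [
    {
      "sourceType": "Syslog",
      "name": "example-syslog-tcp",
      "port": 514,
      "protocol": "TCP",
      "category": "example/syslog/tcp"
    },
    {
      "sourceType": "Syslog",
      "name": "example-syslog-udp",
      "port": 1514,
      "protocol": "UDP",
      "category": "example/syslog/udp"
    }
  ]
}
```

Each name must be unique on the Collector, so the two entries use different names even though they share a sourceType.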

Types of Sources

Each source can have its own unique fields in addition to the common parameters described in Common parameters for log source types. The following tables list the valid Source types and the sourceType value to use for each.

Installed Collectors

Log Sources

| Field Type | Type Value |

|---|---|

| Local File Source | LocalFile |

| Remote File Source | RemoteFileV2 |

| Local Windows Event Log Source | LocalWindowsEventLog |

| Remote Windows Event Log Source | RemoteWindowsEventLog |

| Local Windows Performance Source | LocalWindowsPerfMon |

| Remote Windows Performance Source | RemoteWindowsPerfMon |

| Windows Active Directory Inventory Source | ActiveDirectory |

| Syslog Source | Syslog |

| Script Source | Script |

| Docker Log Source | DockerLog |

Metrics Sources

| Field Type | Type Value |

|---|---|

| Host Metrics Source | SystemStats |

| Streaming Metrics Source | StreamingMetrics |

| Docker Stats Source | DockerStats |

Hosted Collectors

Log Sources

| Field Type | Type Value |

|---|---|

| Akamai SIEM API Source | Universal |

| Amazon S3 Source | Polling |

| AWS S3 Archive Source | Polling |

| AWS CloudFront Source | Polling |

| AWS CloudTrail Source | Polling |

| AWS Elastic Load Balancing Source | Polling |

| AWS Kinesis Firehose for Logs Source | HTTP |

| Amazon S3 Audit Source | Polling |

| AWS Metadata (Tag) Source | Polling |

| Azure Event Hubs Source | Universal |

| Carbon Black Cloud Source | Universal |

| Carbon Black Inventory Source | Universal |

| Cloud Syslog Source | Cloudsyslog |

| Cisco AMP Source | Universal |

| Cisco Meraki Source | Universal |

| CrowdStrike FDR Source | Universal |

| CrowdStrike Source | Universal |

| Cloud SIEM AWS EC2 Inventory Source | Universal |

| Cybereason Source | Universal |

| Duo Source | Universal |

| Google Cloud Platform Source | HTTP |

| HTTP Source | HTTP |

| Microsoft Graph Security API Source | Universal |

| Mimecast Source | Universal |

| Netskope Source | Universal |

| Okta Source | Universal |

| OTLP Source | HTTP |

| Palo Alto Cortex XDR | Universal |

| Proofpoint On Demand Source | Universal |

| Proofpoint TAP Source | Universal |

| Salesforce Source | Universal |

| Sophos Central Source | Universal |

| Tenable Source | Universal |

Metrics Sources

| Field Type | Type Value |

|---|---|

| AWS CloudWatch Source | Polling |

| AWS Kinesis Firehose for Metrics Source | HTTP |

| OTLP Source | HTTP |

Common parameters for log source types

The following parameters are used for log Sources except for Syslog. Syslog Sources do not support Multiline Detection, which means the common parameters multilineProcessingEnabled, useAutolineMatching and manualPrefixRegexp are not applicable. If you provide these in the configuration they will be ignored.

| Parameter | Type | Required? | Default | Description | Access |
|---|---|---|---|---|---|
| sourceType | String | Yes | | Type the correct type of Source, using one of the Type Values listed under Types of Sources. | not modifiable |
| name | String | Yes | | Type a desired name for the Source. The name must be unique per Collector. This value is assigned to the built-in metadata field _source and can be a maximum of 128 characters. | modifiable |
| description | String | No | null | Type a description of the Source. | modifiable |
| fields | JSON Object | No | null | JSON map of key-value fields (metadata) to apply to the Collector or Source. | modifiable |
| hostName | String | No | null | Type a host name for the Source. This value is assigned to the built-in metadata field _sourceHost. The host name can be a maximum of 128 characters. Not supported with the Local Windows Event Log Source and the Local Windows Performance Source. | modifiable |
| category | String | No | null | Type a category for the Source. This value is assigned to the built-in metadata field _sourceCategory. See best practices for details. | modifiable |
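
To make the common parameters concrete, here is a minimal sketch of a single Source definition that sets each of them. The source wrapper follows the Timestamp example on this page, and port and protocol are the Syslog-specific keys used in the data forwarding example. All values, including the team key inside fields, are placeholders assumed for illustration.

```json
{
  "api.version": "v1",
  "source": {
    "sourceType": "Syslog",
    "name": "example-syslog",
    "description": "Syslog messages from example network devices",
    "category": "example/network/syslog",
    "hostName": "example-host",
    "fields": {
      "team": "example-team"
    },
    "port": 514,
    "protocol": "TCP"
  }
}
```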

Timestamp Processing

| Parameter | Type | Required? | Default | Description | Access |
|---|---|---|---|---|---|
| automaticDateParsing | Boolean | No | true | Determines whether timestamp information is parsed. Type true to enable automatic parsing of dates (the default setting); type false to disable. If disabled, no timestamp information is parsed at all. | modifiable |
| timeZone | String | No | null | Type the time zone you'd like the source to use, in TZ database format. Example: "America/Los_Angeles". See time zone format for details. | modifiable |
| forceTimeZone | Boolean | No | false | Type true to force the Source to use a specific time zone; otherwise type false to use the time zone found in the logs. The default setting is false. | modifiable |
| defaultDateFormat | String | No | null | (Deprecated) The default format for dates used in your logs. For more information about timestamp options, see Timestamps, Time Zones, Time Ranges, and Date Formats. See the replacement object, defaultDateFormats, below. | modifiable |
| defaultDateFormats | Object array | No | null | Define formats for the dates present in your log messages. You can specify a locator regex to identify where timestamps appear in log lines. Each object in the defaultDateFormats array has two elements: format (required), which specifies the date format, and locator (optional), a regular expression that specifies the location of the timestamp in your log lines, for example \[time=(.*)\]. For an example, see Timestamp example, below. For more information about timestamp options, see Timestamps, Time Zones, Time Ranges, and Date Formats. | modifiable |
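
The fragment below sketches how the timestamp parameters might be combined. These keys would sit inside a source object alongside sourceType and name. The locator is the \[time=(.*)\] pattern from the table, with its backslashes doubled so that it is a valid JSON string. The time zone and date format are illustrative values only.

```json
{
  "automaticDateParsing": true,
  "timeZone": "America/Los_Angeles",
  "forceTimeZone": true,
  "defaultDateFormats": [
    {
      "format": "yyyy-MM-dd HH:mm:ss",
      "locator": "\\[time=(.*)\\]"
    }
  ]
}
```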

Multiline Processing

| Parameter | Type | Required? | Default | Description | Access |
|---|---|---|---|---|---|
| multilineProcessingEnabled | Boolean | No | true | Type true to enable; type false to disable. The default setting is true. Consider setting to false to avoid unnecessary processing if you are collecting files with a single message per line (for example, Linux system.log). If you're working with multiline messages (for example, log4J or exception stack traces), keep this setting enabled. | modifiable |
| useAutolineMatching | Boolean | No | true | Type true if you'd like message boundaries to be inferred automatically; type false to prevent message boundaries from being automatically inferred (equivalent to the Infer Boundaries option in the UI). The default setting is true. | modifiable |
| manualPrefixRegexp | String | No | null | When using useAutolineMatching=false, type a regular expression that matches the first line of the message to manually create the boundary. Note that any special characters in the regex, such as backslashes or double quotes, must be escaped. For example, this expression: ^\[\d{4}-\d{2}-\d{2}\s+\d{2}:\d{2}:\d{2}\.\d{3}\].* should be escaped like this: ^\\[\\d{4}-\\d{2}-\\d{2}\\s+\\d{2}:\\d{2}:\\d{2}\\.\\d{3}\\].* | modifiable |
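
As an illustration of manual boundary detection, the fragment below turns off automatic boundary inference and supplies the escaped pattern from the table. These keys would sit inside a source object next to sourceType and name, on a Source type other than Syslog.

```json
{
  "multilineProcessingEnabled": true,
  "useAutolineMatching": false,
  "manualPrefixRegexp": "^\\[\\d{4}-\\d{2}-\\d{2}\\s+\\d{2}:\\d{2}:\\d{2}\\.\\d{3}\\].*"
}
```

With this pattern, a new message would begin at each line that starts with a bracketed timestamp such as [2024-01-31 12:00:00.000], and the lines that follow would be grouped with it until the next match.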

Processing Rules

| Parameter | Type | Required? | Default | Description | Access |
|---|---|---|---|---|---|
| filters | Array | No | [ ] | To add processing rules to the Source, specify an array of filter objects, each with a filterType of Exclude, Include, Mask, Hash, or Forward. Review the Rules and Limitations for filters and see Creating processing rules using JSON. | modifiable |
| hashAlgorithm | String | No | md5 | The algorithm used by Hash rules. Refer to Hash Rules. Available values: "MD5", "SHA-256". | modifiable |

When collection should begin

| Parameter | Type | Required? | Default | Description | Access |
|---|---|---|---|---|---|
| cutoffTimestamp | Long | No | 0 (collects all data) | Can be specified instead of cutoffRelativeTime to only collect data more recent than this timestamp, specified as milliseconds since epoch (13 digits). You can use this site to convert to epoch time: http://www.epochconverter.com/. Times in the future are supported. For a Local File Source, this cutoff applies to the "modified" time of the file, not the time of the individual log lines. For example, if you have a file that contains logs with timestamps spanning an entire week and set the cutoffTimestamp to two days ago, all of the logs from the entire week will be ingested, since the file itself was modified more recently than the cutoffTimestamp. A processing rule could be used to filter out unneeded log messages. Review timestamp considerations to understand how Sumo interprets and processes timestamps. Note that if you set this property to a timestamp that overlaps with data that was previously ingested on a source, duplicate data may be ingested into Sumo Logic. | modifiable |
| cutoffRelativeTime | String | No | | Can be specified instead of cutoffTimestamp to provide a relative offset with respect to the current time. The time unit can be months (M), weeks (w), days (d), hours (h), or minutes (m). Use 0m to indicate the current time. Times in the future are not supported. Example: use -1h, -1d, or -1w to collect data that's less than one hour, one day, or one week old, respectively. For a Local File Source, this cutoff applies to the "modified" time of the file, not the time of the individual log lines. For example, if you have a file that contains logs with timestamps spanning an entire week and set the cutoffRelativeTime to two days ago, all of the logs from the entire week will be ingested, since the file itself was modified more recently than the cutoffRelativeTime. A processing rule could be used to filter out unneeded log messages. Review timestamp considerations to understand how Sumo interprets and processes timestamps. Note that if you set this property to a relative time that overlaps with data that was previously ingested on a source, duplicate data may be ingested into Sumo Logic. | not modifiable |
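
A minimal sketch of a relative cutoff follows. The fragment would sit inside a source object and asks the Source to collect only data less than one day old. The value -1d is one of the examples given in the table.

```json
{
  "cutoffRelativeTime": "-1d"
}
```

To use an absolute cutoff instead, the same position would hold a 13-digit millisecond value such as "cutoffTimestamp": 1700000000000, which corresponds to 2023-11-14 22:13:20 UTC. Specify one of the two parameters, not both.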

Non-configurable parameters

The following parameters are automatically configured by the Sumo Logic Service. Don't include them in the sources JSON file, except for when making API requests. When making an API request you will need to provide the id parameter in the JSON file.

- id

- alive - This parameter is updated based on whether Sumo receives a heartbeat message every 15 seconds. A heartbeat checks for successful connectivity. If no successful heartbeat message is received after 30 minutes, this becomes false.

- status

Time zone format

In a JSON source configuration, a string for the timeZone setting does not follow the same format as the time zone setting shown in Sumo Logic. The JSON timeZone property uses the underlying TZ database time zone format instead of (GMT+11:00) style values.

Example:

```json
"timeZone": "America/Los_Angeles"
```

You can find a list of time zone environment variables in this Wikipedia article.

Timestamp example

The following is a Timestamp example in JSON with two default date formats, yyyy-MM-dd HH:mm:ss and yyMMdd HH:mm:ss:

```json
{
  "source": {
    "name": "test",
    "defaultDateFormats": [
      {
        "format": "yyyy-MM-dd HH:mm:ss",
        "locator": "time=(.*),"
      },
      {
        "format": "yyMMdd HH:mm:ss"
      }
    ]
  }
}
```

Creating processing rules using JSON

You can include processing (filtering) rules when using JSON to configure sources. A filter specifies rules about which messages are sent to Sumo Logic.

- Exclude. Removes messages before ingestion to Sumo Logic. Think of Exclude as a "denylist" filter. For more information, see Include and Exclude Rules.

- Include. Sends only the data you explicitly define to Sumo Logic. Think of Include as an "allowlist" filter. For more information, see Include and Exclude Rules.

- Hash. Replaces a matched expression with a hash code to protect sensitive or proprietary information, such as credit card numbers or user names. Because the same input always produces the same hash, you can still track the data even though it's fully hidden. For more information, see Hash Rules.

- Mask. Replaces an expression with a mask string that you can customize; especially useful for protecting passwords or other data you wouldn't normally track. For more information, see Mask Rules.

- Forward. Sends matching log messages to a data forwarding destination. For more information, see Example: data forwarding rule below.

| Parameter | Type | Required? | Description | Access |
|---|---|---|---|---|
| name | String | Yes | A name for the rule. | Modifiable |
| filterType | String | Yes | The filter type. Must be one of the following: Exclude, Include, Hash, Mask, or Forward. | Modifiable |
| regexp | String | Yes | A regular expression used to define the filter. If filterType = Mask or Hash, this regular expression must have at least one matching group, specifying the regions to be replaced by a mask or hash. For multiline messages, add single line modifiers (?s) to the beginning and end of the expression to support matching your string regardless of where it occurs in the message. For example: (?s).*secur.*(?s). Syslog UDP messages may contain a trailing newline character, which will require the above regular expression to properly match your string. | Modifiable |
| mask | String | Yes, when filterType = "Mask" | The mask string that replaces the matched expression. | |
| transparentForwarding | Boolean | No | Syslog forwarding by default prepends a timestamp and hostname to messages to ensure they comply with RFC 3164. If your syslog messages already comply, you can disable this feature by specifying this parameter as false. | Modifiable |
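
The examples later on this page cover Exclude, Mask, and Forward rules. As a complementary sketch, the fragment below shows what an Include rule and a Hash rule might look like. The rule names and regular expressions are hypothetical. Placing hashAlgorithm next to filters at the Source level is an assumption based on the Processing Rules table above.

```json
{
  "hashAlgorithm": "SHA-256",
  "filters": [
    {
      "filterType": "Include",
      "name": "include_errors",
      "regexp": "(?s).*ERROR.*(?s)"
    },
    {
      "filterType": "Hash",
      "name": "hash_username",
      "regexp": "user=(\\S+)"
    }
  ]
}
```

The Hash rule's expression contains one matching group, (\S+), which marks the region to be replaced by the hash. The backslash is doubled so that the pattern is a valid JSON string.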

Example: exclude filter

The following is an example of a filter to exclude messages containing a specified keyword.

```json
"filters": [{
  "filterType": "Exclude",
  "name": "filter_auditd",
  "regexp": ".*exe=\"\\/usr\\/sbin\\/crond\".*terminal=cron\\sres=success.*"
}]
```

When excluding messages based on a string that contains special characters, for example *("test")*, you will need to double-escape the special characters so they're valid within the JSON.

Filter name cannot exceed 32 characters.

Example message content to filter:

```
*("test")*
```

Standard Regex (this is the syntax if you create the filter using the UI):

```
\*\("test"\)\*
```

Filter syntax in JSON:

```
\\*\\(\"test\"\\)\\*
```

Filter example in JSON with double-escaped special characters:

```json
{
  "source": {
    "name": "test",
    "filters": [{
      "filterType": "Exclude",
      "name": "Filter keyword",
      "regexp": "\\*\\(\"test\"\\)\\*"
    }]
  }
}
```

Example: mask filter

The following is an example of a filter to mask messages containing an authorization token.

Example message content to filter:

```
auth":"Basic cABC123vZDAwfvDldmlfZ568dWQ6vvhjER4dgyR33lP"
```

Standard Regex, this is the syntax if you create the filter using the UI:

```
auth"\s*:\s*"Basic\s*([^"]+)"
```

Filter syntax in JSON:

```
auth\"\\s*:\\s*\"Basic\\s*([^\"]+)\"
```

Filter example in JSON with double-escaped special characters:

```json
"filters": [{
  "filterType": "Mask",
  "name": "masktoken",
  "regexp": "auth\"\\s*:\\s*\"Basic\\s*([^\"]+)\"",
  "mask": "##TOKEN##"
}]
```

Example: data forwarding rule

In the JSON below for a source, the filters array specifies a data forwarding rule. Before you can configure a data forwarding rule in JSON, you must obtain the sinkId for the data forwarding destination. For instructions, see Get sinkId for a data forwarding destination below.

```json
{
  "api.version": "v1",
  "sources": [{
    "sourceType": "Syslog",
    "name": "example",
    "port": 514,
    "protocol": "TCP",
    "encoding": "UTF-8",
    "category": "example",
    "useAutolineMatching": false,
    "multilineProcessingEnabled": false,
    "timeZone": "UTC",
    "automaticDateParsing": true,
    "forceTimeZone": false,
    "defaultDateFormat": "dd/MMM/yyyy HH:mm:ss",
    "filters": [{
      "filterType": "Forward",
      "name": "example",
      "regexp": "(?s).*(?s)",
      "sinkId": 22,
      "transparentForwarding": false
    }]
  }]
}
```

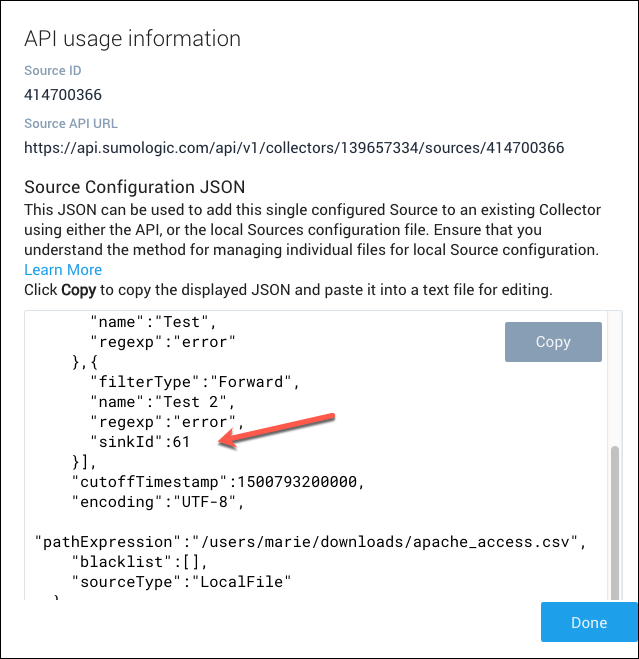

Get sinkId for a data forwarding destination

To determine the sinkId for a data forwarding destination, you use the Sumo web app to create a test data forwarding rule. Sumo updates the JSON configuration for the source with the sinkId of the destination you select. Then you can view the JSON configuration for the source, make a note of the sinkId, and then delete the test processing rule.

These instructions assume you have already created a data forwarding destination.

- Follow the instructions in Configure processing rules for data forwarding to add a data forwarding rule to a source on an installed collector. As part of this process, you will select the data forwarding destination to which you want to forward data.

- To view the JSON configuration for the source you updated in the previous step:

- New UI. In the Sumo Logic main menu select Data Management, and then under Data Collection select Collection. You can also click the Go To... menu at the top of the screen and select Collection.

Classic UI. In the main Sumo Logic menu, select Manage Data > Collection > Collection. - Click the icon to the right of the source. The API usage information panel appears. Make a note of the sinkId in the filter section of the JSON.

- New UI. In the Sumo Logic main menu select Data Management, and then under Data Collection select Collection. You can also click the Go To... menu at the top of the screen and select Collection.

- Click the icon to the right of the Source. Make a note of the sinkId in the filter section of the JSON.

- Click Done to close the API usage information panel.

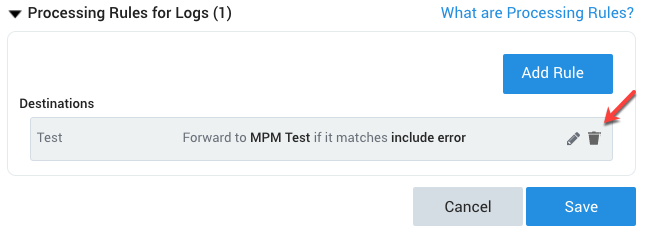

- Now that you have determined the sinkId for the data forwarding destination, delete the test rule.

- New UI. In the Sumo Logic main menu select Data Management, and then under Data Collection select Collection. You can also click the Go To... menu at the top of the screen and select Collection.

Classic UI. In the main Sumo Logic menu, select Manage Data > Collection > Collection. - Navigate to the source to which you added the test rule.

- In the Processing Rules section of the page, click the delete icon to the right of the test rule.

- New UI. In the Sumo Logic main menu select Data Management, and then under Data Collection select Collection. You can also click the Go To... menu at the top of the screen and select Collection.

Now that you have the sinkId for the data forwarding destination, you can define the filters array in the JSON for your source, following the example in Example: data forwarding rule above.